How do you wish the derivative was explained to you? Here's my take.

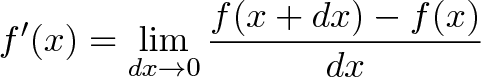

Psst! The derivative is the heart of calculus, buried inside this definition:

$$f'(x) = \lim_{dx \to 0} \frac{f(x + dx) - f(x)}{dx}$$

But what does it mean?

Let's say I gave you a magic newspaper that listed the daily stock market changes for the next few years (+1% Monday, -2% Tuesday...). What could you do?

Well, you'd apply the changes one-by-one, plot out future prices, and buy low / sell high to build your empire. You could even hire away the monkeys who currently throw darts at newspapers.

Others call the derivative "the slope of a function" -- it's so bland! Like having the magic newspaper, the derivative is a crystal ball that lets you see how a pattern will play out. You can plot the past/present/future, find minimums/maximums, and yes, staff your simian workforce to pick stocks.

Step away from the gnarly equation. Equations exist to convey ideas: understand the idea, not the grammar.

Derivatives create a perfect model of change from an imperfect guess.

This result came over thousands of years of thinking, from Archimedes to Newton. Let's look at the analogies behind it.

We all live in a shiny continuum

Infinity is a constant source of paradoxes ("headaches"):

- A line is made up of points? Sure.

- So there's an infinite number of points on a line? Yep.

- How do you cross a room when there's an infinite number of points to visit? (Gee, thanks Zeno).

And yet, we move. My intuition is to fight infinity with infinity. Sure, there's infinity points between 0 and 1. But I move two infinities of points per second (somehow!) and I cross the gap in half a second.

Distance has infinite points, motion is possible, therefore motion is in terms of "infinities of points per second".

Instead of thinking of differences ("How far to the next point?") we can compare rates ("How fast are you moving through this continuum?").

It's strange, but you can see 10/5 as "I need to travel 10 'infinities' in 5 segments of time. To do this, I travel 2 'infinities' for each unit of time".

Analogy: See division as a rate of motion through a continuum of points

What's after zero?

Another brain-buster: What number comes after zero? .01? .0001?

Hrm. Anything you can name, I can name smaller (I'll just halve your number... nyah!).

Even though we can't calculate the number after zero, it must be there, right? Like demons of yore, it's the "number that cannot be written, lest ye be smitten".

Call the gap to the next number "dx". I don't know exactly how big it is, but it's there!

Analogy: dx is a "jump" to the next number in the continuum.

Measurements depend on the instrument

The derivative predicts change. Ok, how do we measure speed (change in distance)?

Officer: Do you know how fast you were going?

Driver: I have no idea.

Officer: 95 miles per hour.

Driver: But I haven't been driving for an hour!

We clearly don't need a "full hour" to measure your speed. We can take a before-and-after measurement (over 1 second, let's say) and get your instantaneous speed. If you moved 140 feet in one second, you're going ~95mph. Simple, right?
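As a quick check of the officer's arithmetic, here is a minimal Python sketch of the unit conversion. The function name `feet_per_second_to_mph` is made up for this illustration.

```python
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600

def feet_per_second_to_mph(feet_per_second):
    """Convert a speed in feet per second to miles per hour."""
    return feet_per_second * SECONDS_PER_HOUR / FEET_PER_MILE

# 140 feet covered in one second, scaled up to a full hour.
print(round(feet_per_second_to_mph(140), 1))  # 95.5
```

The one-second measurement is simply scaled up to an hour; nobody had to drive for the whole hour.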

Not exactly. Imagine a video camera pointed at Clark Kent (Superman's alter-ego). The camera records 24 pictures/sec (about 42ms per photo) and Clark seems still. On a second-by-second basis, he's not moving, and his speed is 0mph.

Wrong again! Between each photo, within those 42ms, Clark changes to Superman, solves crimes, and returns to his chair for a nice photo. We measured 0mph but he's really moving -- he goes too fast for our instruments!

Analogy: Like a camera watching Superman, the speed we measure depends on the instrument!

Running the Treadmill

We're nearing the chewy, slightly tangy center of the derivative. We need before-and-after measurements to detect change, but our measurements could be flawed.

Imagine a shirtless Santa on a treadmill (go on, I'll wait). We're going to measure his heart rate in a stress test: we attach dozens of heavy, cold electrodes and get him jogging.

Santa huffs, he puffs, and his heart rate shoots to 190 beats per minute. That must be his "under stress" heart rate, correct?

Nope. See, the very presence of stern scientists and cold electrodes increased his heart rate! We measured 190bpm, but who knows what we'd see if the electrodes weren't there! Of course, if the electrodes weren't there, we wouldn't have a measurement.

What to do? Well, look at the system:

- measurement = actual amount + measurement effect

Ah. After lots of studies, we may find "Oh, each electrode adds 10bpm to the heart rate". We make the measurement (imperfect guess of 190) and remove the effect of electrodes ("perfect estimate").

Analogy: Remove the "electrode effect" after making your measurement

By the way, the "electrode effect" shows up everywhere. Research studies have the Hawthorne Effect where people change their behavior because they are being studied. Gee, it seems everyone we scrutinize sticks to their diet!

Understanding the derivative

Armed with these insights, we can see how the derivative models change:

Start with some system to study, f(x):

- Change by the smallest amount possible (dx)

- Get the before-and-after difference: f(x + dx) - f(x)

- We don't know exactly how small "dx" is, and we don't care: get the rate of motion through the continuum: [f(x + dx) - f(x)] / dx

- This rate, however small, has some error (our cameras are too slow!). Predict what happens if the measurement were perfect, if dx wasn't there.

The magic's in the final step: how do we remove the electrodes? We have two approaches:

- Limits: what happens when dx shrinks to nothingness, beyond any error margin?

- Infinitesimals: What if dx is a tiny number, undetectable in our number system?

Both are ways to formalize the notion of "How do we throw away dx when it's not needed?".
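The steps above can be sketched numerically. This is a minimal Python illustration, not a formal definition: the helper name `difference_quotient` and the choice of `math.sin` as the system to study are assumptions made for the example. It takes the "limits" approach by shrinking dx and watching the measured rate settle.

```python
import math

def difference_quotient(f, x, dx):
    """Measured rate of change: [f(x + dx) - f(x)] / dx."""
    return (f(x + dx) - f(x)) / dx

# Shrink dx and watch the imperfect measurements settle toward one value.
for dx in [1.0, 0.1, 0.01, 0.001]:
    rate = difference_quotient(math.sin, 0.0, dx)
    print(f"dx = {dx:<6} measured rate = {rate:.6f}")
```

For sine at x = 0 the printed rates creep toward 1, which is what a perfect instrument (one with no dx) would report.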

My pet peeve: Limits are a modern formalism; they didn't exist in Newton's time. They help make dx disappear "cleanly". But teaching them before the derivative is like showing a steering wheel without a car! It's a tool to help the derivative work, not something to be studied in a vacuum.

An Example: f(x) = x^2

Let's shake loose the cobwebs with an example. How does the function f(x) = x^2 change as we move through the continuum?
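The algebra, reconstructed here from the steps listed earlier (take the before-and-after difference, divide by dx, then drop dx), would run along these lines:

$$
\begin{aligned}
f(x) &= x^2 \\
f(x + dx) &= (x + dx)^2 = x^2 + 2x \cdot dx + dx^2 \\
f(x + dx) - f(x) &= 2x \cdot dx + dx^2 \\
\frac{f(x + dx) - f(x)}{dx} &= 2x + dx \\
\text{with } dx \to 0: \quad \frac{d}{dx}\, x^2 &= 2x
\end{aligned}
$$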

Note the difference in the last 2 equations:

- One has the error built in (dx)

- The other has the "true" change, where dx = 0 (we assume our measurements have no effect on the outcome)

Time for real numbers. Here's the values for f(x) = x^2, with intervals of dx = 1:

- 1, 4, 9, 16, 25, 36, 49, 64...

The absolute change between each result is:

- 1, 3, 5, 7, 9, 11, 13, 15...

(Here, the absolute change is the "speed" between each step, where the interval is 1)
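Both lists can be regenerated with a few lines of Python. This is only a sketch; it assumes we start counting from x = 0 so that the first change (from 0 to 1) shows up in the list.

```python
# Values of f(x) = x^2 at x = 0, 1, 2, ..., 8 (steps of dx = 1).
squares = [x ** 2 for x in range(9)]

# Change between neighbors: f(x + 1) - f(x).
changes = [after - before for before, after in zip(squares, squares[1:])]

print(squares[1:])  # [1, 4, 9, 16, 25, 36, 49, 64]
print(changes)      # [1, 3, 5, 7, 9, 11, 13, 15]
```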

Consider the jump from x=2 to x=3 (3^2 - 2^2 = 5). What is "5" made of?

- Measured rate = Actual Rate + Error

- 5 = 2x + dx

- 5 = 2(2) + 1

Sure, we measured a "5 units moved per second" because we went from 4 to 9 in one interval. But our instruments trick us! 4 units of speed came from the real change, and 1 unit was due to shoddy instruments (1.0 is a large jump, no?).

If we restrict ourselves to integers, 5 is the perfect speed measurement from 4 to 9. There's no "error" in assuming dx = 1 because that's the true interval between neighboring points.

But in the real world, measuring every 1.0 seconds is too slow. What if our dx was 0.1? What speed would we measure at x=2?

Well, we examine the change from x=2 to x=2.1:

- 2.1^2 - 2^2 = 0.41

Remember, 0.41 is what we changed in an interval of 0.1. Our speed-per-unit is 0.41 / .1 = 4.1. And again we have:

- Measured rate = Actual Rate + Error

- 4.1 = 2x + dx

Interesting. With dx=0.1, the measured and actual rates are close (4.1 to 4, 2.5% error). When dx=1, the rates are pretty different (5 to 4, 25% error).

Following the pattern, we see that throwing out the electrodes (letting dx=0) reveals the true rate of 2x.
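To watch the pattern continue past dx = 0.1, here is a small Python sketch. The function name `measured_rate` is hypothetical; it just packages the before-and-after measurement for f(x) = x^2.

```python
def measured_rate(x, dx):
    """Rate of change of f(x) = x^2 measured over a jump of size dx."""
    return ((x + dx) ** 2 - x ** 2) / dx

x = 2
actual_rate = 2 * x  # the "perfect" model, 2x

for dx in [1, 0.1, 0.01, 0.001]:
    measured = measured_rate(x, dx)
    error = measured - actual_rate          # this is the leftover dx
    percent = 100 * error / actual_rate
    print(f"dx = {dx:<6} measured = {measured:.4f}  error = {error:.4f} ({percent:.3f}%)")
```

The error column tracks dx itself (up to floating-point rounding), so as the jump shrinks the measurement closes in on 4.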

In plain English: We analyzed how f(x) = x^2 changes, found an "imperfect" measurement of 2x + dx, and deduced a "perfect" model of change as 2x.

The derivative as "continuous division"

I see the integral as better multiplication, where you can apply a changing quantity to another.

The derivative is "better division", where you get the speed through the continuum at every instant. Something like 10/5 = 2 says "you have a constant speed of 2 through the continuum".

When your speed changes as you go, you need to describe your speed at each instant. That's the derivative.

If you apply this changing speed to each instant (take the integral of the derivative), you recreate the original behavior, just like applying the daily stock market changes to recreate the full price history. But this is a big topic for another day.
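As a rough illustration of that claim, the sketch below applies the rate 2x in tiny steps and checks that the running total lands near x^2. The function name `rebuild_from_rate` and the step size are assumptions for the example; this is a plain running sum, not a careful integration routine.

```python
def rebuild_from_rate(rate, x_end, dx=0.001):
    """Accumulate rate(x) * dx from 0 up to x_end (a simple running sum)."""
    total = 0.0
    steps = round(x_end / dx)
    for i in range(steps):
        x = i * dx
        total += rate(x) * dx  # apply this instant's change
    return total

# The derivative of x^2 is 2x; summing its small changes should land near x^2.
approx = rebuild_from_rate(lambda x: 2 * x, 3.0)
print(approx, "vs", 3.0 ** 2)
```

The total comes out slightly under 9 because each step uses the speed at the start of its interval; a smaller dx narrows the gap.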

Gotcha: The Many meanings of "Derivative"

You'll see "derivative" in many contexts:

"The derivative of x^2 is 2x" means "At every point, we are changing by a speed of 2x (twice the current x-position)". (General formula for change)

"The derivative is 44" means "At our current location, our rate of change is 44." When f(x) = x^2, at x=22 we're changing at 44 (Specific rate of change).

"The derivative is dx" may refer to the tiny, hypothetical jump to the next position. Technically, dx is the "differential" but the terms get mixed up. Sometimes people will say "derivative of x" and mean dx.

Gotcha: Our models may not be perfect

We found the "perfect" model by making a measurement and improving it. Sometimes, this isn't good enough -- we're predicting what would happen if dx wasn't there, but added dx to get our initial guess!

Some ill-behaved functions defy the prediction: there's a difference between removing dx with the limit and what actually happens at that instant. These are called "discontinuous" functions, which is essentially "cannot be modeled with limits". As you can guess, the derivative doesn't work on them because we can't actually predict their behavior.
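Here is one way to see the prediction fail, sketched in Python with a made-up `step` function that jumps from 0 to 1 at x = 0. Instead of settling down as dx shrinks, the measured rate across the jump keeps growing.

```python
def step(x):
    """A function with a jump: 0 before x = 0, and 1 from there on."""
    return 0.0 if x < 0 else 1.0

def rate_across_jump(dx):
    """Measured rate over an interval of width dx centered on the jump."""
    return (step(dx / 2) - step(-dx / 2)) / dx

for dx in [1.0, 0.1, 0.01, 0.001]:
    print(f"dx = {dx:<6} measured rate = {rate_across_jump(dx):.1f}")
```

The measured rate behaves like 1/dx, so there is no "true" value to recover by throwing dx away.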

Discontinuous functions are rare in practice, and often exist as "Gotcha!" test questions ("Oh, you tried to take the derivative of a discontinuous function, you fail"). Realize the theoretical limitation of derivatives, and then realize their practical use in measuring nearly every natural phenomenon. Nearly every function you'll see (sine, cosine, e^x, polynomials, etc.) is continuous.

Gotcha: Integration doesn't really exist

The relationship between derivatives, integrals and anti-derivatives is nuanced (and I got it wrong originally). Here's a metaphor. Start with a plate, your function to examine:

- Differentiation is breaking the plate into shards. There is a specific procedure: take a difference, find a rate of change, then assume dx isn't there.

- Integration is weighing the shards: your original function was "this" big. There's a procedure, cumulative addition, but it doesn't tell you what the plate looked like.

- Anti-differentiation is figuring out the original shape of the plate from the pile of shards.

There's no algorithm to find the anti-derivative; we have to guess. We make a lookup table with a bunch of known derivatives (original plate => pile of shards) and look at our existing pile to see if it's similar. "Let's find the integral of 10x. Well, it looks like 2x is the derivative of x^2. So... scribble scribble... 10x is the derivative of 5x^2."
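As a sketch, the lookup-and-scale reasoning in that example runs as follows. The constant C is a standard addition not mentioned above; any constant disappears when differentiating, so the shards can't tell us what it was.

$$
\frac{d}{dx}\,x^2 = 2x \quad\Rightarrow\quad \frac{d}{dx}\,5x^2 = 5 \cdot 2x = 10x \quad\Rightarrow\quad \int 10x\,dx = 5x^2 + C
$$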

Finding derivatives is mechanics; finding anti-derivatives is an art. Sometimes we get stuck: we take the changes, apply them piece by piece, and mechanically reconstruct a pattern. It might not be the "real" original plate, but is good enough to work with.

Another subtlety: aren't the integral and anti-derivative the same? (That's what I originally thought)

Yes, but this isn't obvious: it's the fundamental theorem of calculus! (It's like saying "Aren't a^2 + b^2 and c^2 the same? Yes, but this isn't obvious: it's the Pythagorean theorem!"). Thanks to Joshua Zucker for helping sort me out.

Reading math

Math is a language, and I want to "read" calculus (not "recite" calculus, i.e. like we can recite medieval German hymns). I need the message behind the definitions.

My biggest aha! was realizing the transient role of dx: it makes a measurement, and is removed to make a perfect model. Limits and infinitesimals are formalisms; we shouldn't get caught up in them. Newton seemed to do ok without them.

Armed with these analogies, other math questions become interesting:

- How do we measure different sizes of infinity? (In some sense they're all "infinite", in other senses the range (0,1) is smaller than (0,2))

- What are the real rules about making "dx go away"? (How do infinitesimals and limits really work?)

- How do we describe numbers without writing them down? "The next number after 0" is the beginnings of analysis (which I want to learn).

The fundamentals are interesting when you see why they exist. Happy math.

Other Posts In This Series

- A Gentle Introduction To Learning Calculus

- Understanding Calculus With A Bank Account Metaphor

- Prehistoric Calculus: Discovering Pi

- A Calculus Analogy: Integrals as Multiplication

- Calculus: Building Intuition for the Derivative

- How To Understand Derivatives: The Product, Power & Chain Rules

- How To Understand Derivatives: The Quotient Rule, Exponents, and Logarithms

- An Intuitive Introduction To Limits

- Why Do We Need Limits and Infinitesimals?

- Learning Calculus: Overcoming Our Artificial Need for Precision

- A Friendly Chat About Whether 0.999... = 1

- Analogy: The Calculus Camera

- Abstraction Practice: Calculus Graphs


73 Comments on "Calculus: Building Intuition for the Derivative"

I just wanted to let you know that I really appreciate the effort you put into this. I only discovered this website a few days ago, and I’ve been having a blast reading all those intuitive approaches!!

You should consider writing an elementary and highschool book of mathematics, as well as teaching on khansacademy :P

Please keep this flowing :) and if there’s any way we, the audience, can support you, please do mention how!

@AK: Thanks for the comment — really appreciate the support! I’m actually looking at ways to help tap into the community — one idea is getting a little section after each post to share the analogies that worked (or questions that are still outstanding). I’d love certain articles (like the one on e, for example) to become a living reference about “What actually made it click”. Wikipedia is great for strict definitions, Khan and others for detailed tutorials / practice problems, and I’d like to contribute aha! moments (i.e. the last step that turned the light bulb on). Definitely something I’m looking to develop, I’ll be posting on this soon =).

What a great explanation! It took me back to my days as a Physics major in college – only I wish I had this explanation back then :)

Wow, now that’s power. Takes a brilliant mind to break complexity down, making this one of the best sites online!

@Pat: Thanks, glad you liked it! Oh man, how I wish I could go back in time and give myself some tutorials :).

@Zaine: Thanks, I really appreciate it!

Joshua Zucker emailed me after the comment form ate his reply, pasting below:

Apparently my long comment on your recent post got eaten somewhere along the line. Darn.

Anyway, my point was that you really misrepresent integrals. They’re easier than derivatives, not harder. It’s antiderivatives that are tough, and although the fundamental theorem says they’re the same as integrals, the whole point of the theorem is that there’s something meaningful to say there! Well, actually, antiderivatives aren’t really tough, it’s just that we’re picky about wanting to write them in terms of certain kinds of functions, which is your “break lots of plates” analogy. We know exactly how the pieces were made, so we can just glue them back together. The hard part is recognizing the brand name of the plate when we’re done, not reassembling the plate.

You also seem inconsistent about saying in your intro that you can use the rate of change to reconstruct the future prices, and then later saying that putting the pieces back together is hard. Integrals are, as you say, “better multiplication”: you just have to multiply and add.

There is lots and lots of good stuff in the post too, of course! I particularly love the idea of the derivative as an inference of what the perfect tool would measure, from approximations using imperfect tools. I don’t think I ever thought of it as a tool in quite that sense, and it’s a useful thing. I mean, I have thought of the derivative at a point as a local property, and the derivative as an operator that maps functions to functions, but this feels more like a caliper that is open to some finite amount and then you’re reducing that amount to see what’s going on; it captures more of the limit process in there.

Oh, one more note: Oddly, I’m totally comfortable with the idea of dx = the next number right after 0, or the jump between “adjacent” real numbers, but I am really bothered by the analogy of dividing 5 infinities by 2 infinities of points to get 5/2.

====

Hi Joshua,

Great feedback — I think the nuances of integrals vs. anti-derivatives were previously lost on me :). After a little reading (http://mathforum.org/library/drmath/view/53755.html) I think I’m up to speed:

* Integration is literally the process of gluing the pieces together (mechanical, finding the sum of many products)

* Anti-derivatives are the function whose derivative is f (i.e., the “brand” as you say)

The essence of the FTOC (which I’ve previously missed) is that Integrals are *computable* from anti-derivatives, which is pretty amazing. Literally gluing pieces isn’t hard, but saying “this reconstructed plate is an Ikea Furjen” is the tricky part (realizing what function, easily defined, would create such an integral).

“…the idea of the derivative as an inference of what the perfect tool would measure, from approximations using imperfect tools” — I love this concise description, that’s exactly it. Yes, in this context it’s like a little caliper which is prodding, only to disappear again to help figure out a greater result. The operator and local property / slope interpretations are other ones to switch between. When writing this article, I was ruminating on the purpose of limits, which always bothered me because they were ignored so often in engineering classes (even though the derivative wasn’t!). In this case, limits were mathematical scaffolding.

The 5 vs 2 infinities doesn’t quite sit right with me either — it’s my gut screaming for there to be “some” way to move through an infinitude of points. My analysis knowledge is very limited, but perhaps something like a Lebesgue measure could capture this notion (that 0-5 is a larger infinite range than 0-2)? (http://en.wikipedia.org/wiki/Lebesgue_measure).

Really appreciate the discussion, I love refining these thoughts! I’ll update the article soon, as I get my intuitions in order.

===

Josh: I think a better analogy is this:

Integration is piling all the shards on a scale and reading the total.

Antidifferentiation is putting the shards carefully back together in exactly the right order and recognizing the plate.

I don’t understand cumulative frequency so well. Please help.

i need more details on how to solve the partial fractions and integrations.

It is really interesting. I have enjoyed it and learned a lot. Today I understood what a derivative is. I had been searching for this, and you gave it to us. Thank you so much. Please keep giving us this opportunity to learn math.

Khalid,

Long-time lurker, first-time poster. Firstly, just wanted to say congrats on all your work here, really impressive. This is my favourite maths site on the web; I see the seeds of an educational revolution here. Reminds me of the time I got a weighty book “Applying Maths in the Chemical and Biological Sciences”. I was hoping for an interesting novel, what I got was almost pure grammar, i.e. I was looking for semantics but all I got was syntax. Your articles explain the meaning, i.e. utility, of these abstract notions. Your complex numbers article helped solve the riddle of how “imaginary numbers” could be used in the real world, so thanks!

Like the (modified!) analogy for the distinction of integral and anti-derivative, which was yet another one of those esoteric relationships that was never explored in high school; are you going to amend the original article?

Regards,

John


@Bassman, Ogbuka: I’ll take those as suggestions for future topics, thanks.

@Asmaul: Glad it was helpful!

@John: Thanks for the note, really appreciate it! I hear you, so many math explanations just focus on the grammar, like the lifeless language classes that nobody ever seems to learn from (contrasted with learning a language by actually being immersed in it and speaking it, vs. trying to crunch through the rules like a computer).

I’m going to update the article right now with the new integral/anti-derivative analogy. Thanks again for posting!

This came at a pretty good time for me since its publication coincided with my own autodidactic journey through math! I was fresh into calc/derivatives when this came and I skimmed through, initially getting about half of it. Then while walking my dogs today I got deep into thinking about really understanding derivatives after a few plug and chug sessions, and I began recalling what you had written (especially regarding the “actual rate+error” part) and the superman analogy.

In retrospect it was a good thing I was walking in the barren woods because the unconscious “OOOOOOOOOOHHH!” of my aha moment was so loud. My dogs didn’t seem to care though, they were busy pooping and such.

Thank you, thank you, thank you!

@Anonymous: Awesome, I’m glad the aha! came :). I’m planning on making some changes to the site to help share and discuss the individual aha! moments, really appreciate the note!

Khalid,

This is great! Derivatives were always out of focus to me but this is helping clear things up.

Sebastian

@Sebastian: Thanks, glad it helped :).

Hey Kalid, another great article!

But I noticed something; couldn’t you just, instead of even doing all the other math, just take the exponent of the original number, multiply the number in front of it and then minus one from the exponent? If you didn’t get that, here’s what I mean: the derivative of x^2=2*1(x)^(2-1), which equates to 2x. It also works in the reverse of finding the original number using the derivative: 10x^1;10x^(1+1)=10x^2; (10/2)x^2=5x^2. Should I have put this here, or on your new aha moments and FAQ thingy?

Thank you,

Just a kid.

@just a kid: Thanks for the comment! For posting, either method is fine! The aha!/FAQ thingy is a way to have longer discussions, since regular wordpress comments don’t have threading (and the discussions could get hard to follow).

Your shortcut definitely works (take the exponent, decrease by one). It’s neat to see why this works: if we’re taking the derivative of x^n (x raised to some power), we make a model like this:

[(x + dx)^n - x^n] / dx

= [(x^n + Something * x^(n-1) * dx + Something2 * x^(n-2) * dx^2 + …) - x^n] / dx

= Something * x^(n-1) + Something2 * x^(n-2) * dx + …

Most of the other terms go away because we want dx to be zero (i.e., assume a perfect model). We’re left with

Something * x^(n-1)

And what is the “Something”? Well, it’s the number “n” (this is due to the Binomial Theorem), more details here: /articles/how-to-understand-combinations-using-multiplication/

But yep, you got it — there’s a shortcut to figure out how the derivative of a regular polynomial (x^n) will behave :).
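For anyone who wants to poke at this, here is a short Python sketch. The name `power_rate` and the sample values n = 5, x = 2 are arbitrary choices for illustration. It checks that the binomial coefficient on the x^(n-1) * dx term is n, and that the measured rate heads toward n * x^(n-1).

```python
from math import comb

def power_rate(x, n, dx):
    """Measured rate of change of x^n over a jump of size dx."""
    return ((x + dx) ** n - x ** n) / dx

n, x = 5, 2.0

# Binomial theorem: the x^(n-1) * dx term of (x + dx)^n has coefficient C(n, 1) = n.
print(comb(n, 1))  # 5

# The measured rate approaches n * x^(n-1) as dx shrinks.
print(power_rate(x, n, 1e-6), "vs", n * x ** (n - 1))
```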

This is a fair explanation of the theory behind derivatives; but I like how Wilberger explains and motivates tangent curves (which are directly and simply related to derivatives). Not only does he NOT use the idea of “dx” (which doesn’t actually exist in any system of numbers beyond the integers, since there is no unique number that is closest to zero), but he winds up defining the theory so that it works on arbitrary algebraic curves (not only functions).

Check it out — look at his (njwilberger’s) Math Foundations series on YouTube. Most people reading here will be able to skip to something like the episode on doing calculus on the unit circle, but don’t expect to understand EVERYTHING if you do that. The interesting thing is that he defines this without using limits at all; the essential point is that he uses “the nth degree polynomial that best approximates the surface at that point” (of course, this is the Taylor expansion at that point).

-Wm

@wm: Thanks for the pointer! I’ll check it out.


Really great stuff. Mathematics is the foundation of all science and science is the compass to help us navigate the universe. Keep up the good work. Very much appreciated.

@Still learning: Thanks — really appreciate the encouragement!

Hi Khalid,

Great article. I have always been fascinated by calculus and always wanted to decipher the true meaning of derivative. Your article gives me a great insight. However I would beg you to clarify the following confusion that has arisen.

We all know that derivative of Y = X^2 is 2x. when you calculate values of y for x=2 and 3, you get y = 4 and 9 respectively. The change in y here is 9-4 = 5. However if I substitute x= 2 in the derivative function dy/dx it gives me 2x = 4. you showed us why this difference exists. It is because of the dx factor (Shoddy instrument). But the reality is that y changed by 5 units when x changed from 2 to 3. Are you saying that dy/dx or derivative is not here to calculate rate of change for such large changes and if you use it for large changes results are inaccurate. Does that mean that dy/dx can only be used to calculate very small changes.

Earlier I thought if you want to find how a function f(x) is changing w.r.t x between 2 values without substituting the values, just calculate the derivative and substitute x but it seems I was wrong?

Also I didn’t understand when you say

“The derivative is 44” means “At our current location, our rate of change is 44.”

Change is a relative term. How can there be a change at a current location. It has always got to be between two locations.

Heya, I just hopped over to your web-site through StumbleUpon. Not something I would typically browse, but I enjoyed your thoughts nonetheless. Thank you for making something worth reading through.

Sudar, I understand your confusion.

Your last paragraph is the most important. The differential MIGHT be understood as the rate of change at a single point, but it also might be confusing if you think of it like that. It’s important that you see that the differential is not the same thing as the difference. The difference requires two different points; the differential takes only one point.

Another way to think of the differential is that it’s the slope of the line that best approximates the curve at that point. This definition gives you some surprising algebraic power — and it also suggests some other operations, such as the “linear subderivative”, which is the _line_ that best approximates the curve, and from that the “quadratic subderivative” (and so on). These are very cleanly defined operations on algebraic curves, and require only algebra, no analysis or limits.

-Wm

The derivative is a concept that relates a continuous property( average change ) to a discrete one (instantaneous change). Even if one had a perfect instrument to measure instantaneous change, one wouldn’t be able to – because of our conception (and consequent definition) of speed.

To properly understand a derivative you would need the concept of a limit. Limits are to calculus what de Broglie’s wavelength is to quantum physics (it bridges the gap between wave and particle properties – between discrete and continuous)

Also limit is not a way to make the derivative work. It is just one application of limit.

In physics and signal theory certain functions are so complicated that you have to use limits to define them – we call them generalized functions.

The derivative can be taught without limits (since the derivative deals with rate of change), but if you are introducing infinitesimals then I think you could have introduced the limit too.

Nikhil, you do not need limits or infinitesimals to properly understand the derivative. The derivative is sufficiently understood as the slope of the line tangent to a curve at a point. This geometric understanding does not invoke limits or infinitesimals. You can add in limits to this definition to handle piecewise continuous curves, but as-is this definition can handle arbitrary curves, rather than being limited to functions.

If one is learning general calculus then infinitesimals are essential; but if one is learning the derivative they are not, and therefore no limits are needed. Iverson actually wrote a Calculus text without using limits, and he only used infinitesimals informally. It’s available online at http://www.jsoftware.com/jwiki/Books. Aside from that oddity, the text is notable for its computational focus and for its treatment of some advanced theoretical topics such as fractional integrals (Wikipedia calls this the “differintegral”).

-Wm

“Nikhil, you do not need limits or infinitesimals to properly understand the derivative.”

I never said that you need limits to understand the derivative. Read the last paragraph of my comment.

In your explanation, you talk about infinity and the continuum. What I was saying is – that the conceptual leap from there to that of a limit is very small. So there is no need to avoid the concept of a limit.

One doesn’t need the epsilon – delta definition to introduce the concept of a limit.

Ak I applaud you for making the seemingly abstract become apparent. The manner in which you use choice words only adds to your gift of teaching. I’ve read you on other articles and you continue to be the king of explaining the complex in terms of understandable language .

Jason