Think of the dot product as directional multiplication. Multiplication goes beyond repeated counting: it's applying the essence of one item to another.

Typical multiplication combines growth rates:

- "3 x 4" can mean "Take your 3x growth and make it 4x larger, to get 12x"

- Complex multiplication combines rotations.

- Integrals do piece-by-piece multiplication.

If a vector is "growth in a direction", there are a few operations we can do:

- Add vectors: Accumulate the growth contained in several vectors.

- Multiply by a constant: Make an existing vector stronger.

- Dot product: Apply the directional growth of one vector to another. The result is how much stronger we've made the original (positive, negative, or zero).

Today we'll build our intuition for how the dot product works.

Getting the Formula Out of the Way

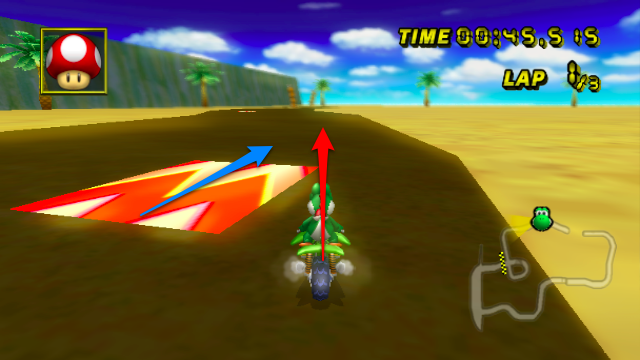

You've seen the dot product equation everywhere:

$$\vec{a} \cdot \vec{b} = a_x b_x + a_y b_y = |\vec{a}|\,|\vec{b}|\cos(\theta)$$

And also the justification: "Well Billy, the Law of Cosines (you remember that, don't you?) says the following calculations are the same, so they are." Not good enough -- it doesn't click! Beyond the computation, what does it mean?

The goal is to apply one vector to another. The equation above shows two ways to accomplish this:

- Rectangular perspective: combine x and y components

- Polar perspective: combine magnitudes and angles

The "this stuff = that stuff" equation just means "Here are two equivalent ways to 'directionally multiply' vectors".
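If you like to check things numerically, here is a minimal Python sketch that computes both sides of the equation for the same pair of 2D vectors. The function names `dot_rect` and `dot_polar` are illustrative, not from any library. The polar version only multiplies magnitudes and the cosine of the angle between the vectors, read off with `math.hypot` and `math.atan2`.

```python
import math

def dot_rect(a, b):
    """Rectangular perspective: combine x and y components."""
    return a[0] * b[0] + a[1] * b[1]

def dot_polar(a, b):
    """Polar perspective: combine magnitudes and the angle between the vectors."""
    theta = math.atan2(b[1], b[0]) - math.atan2(a[1], a[0])
    return math.hypot(*a) * math.hypot(*b) * math.cos(theta)

a, b = (3.0, 1.0), (2.0, 4.0)
print(dot_rect(a, b))             # 10.0
print(round(dot_polar(a, b), 9))  # 10.0
```

The rounding on the polar side only hides floating-point noise from `cos` and `atan2`; the two perspectives agree.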

Seeing Numbers as Vectors

Let's start simple, and treat 3 x 4 as a dot product:

$$(3) \cdot (4) = 3 \times 4 = 12$$

The number 3 is "directional growth" in a single dimension (the x-axis, let's say), and 4 is "directional growth" in that same direction. 3 x 4 = 12 means we get 12x growth in a single dimension. Ok.

Now, suppose 3 and 4 refer to different dimensions. Let's say 3 means "triple your bananas" (x-axis) and 4 means "quadruple your oranges" (y-axis). Now they're not the same type of number: what happens when we apply growth (use the dot product) in our "bananas, oranges" universe?

- (3,0) means "Triple your bananas, destroy your oranges"

- (0,4) means "Destroy your bananas, quadruple your oranges"

Applying (0,4) to (3,0) means "Destroy your banana growth, quadruple your orange growth". But (3, 0) had no orange growth to begin with, so the net result is 0 ("Destroy all your fruit, buddy").

$$(3, 0) \cdot (0, 4) = 3 \cdot 0 + 0 \cdot 4 = 0$$

See how we're "applying" and not simply adding? With regular addition, we smush the vectors together: (3, 0) + (0, 4) = (3, 4) [a vector which triples your bananas and quadruples your oranges].

"Application" is different. We're mutating the original vector based on the rules of the second. And the rules of (0, 4) are "Destroy your banana growth, and quadruple your orange growth." When applied to something with only bananas, like (3, 0), we're left with nothing.
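Here is a tiny sketch of the banana/orange universe, assuming vectors are plain `(bananas, oranges)` tuples; `add` and `dot` are hypothetical helper names. It contrasts smushing the two vectors together with applying one to the other.

```python
def add(u, v):
    """Regular addition: smush the growth together component by component."""
    return (u[0] + v[0], u[1] + v[1])

def dot(u, v):
    """Application: banana growth meets banana growth, orange meets orange."""
    return u[0] * v[0] + u[1] * v[1]

triple_bananas = (3, 0)     # (bananas, oranges)
quadruple_oranges = (0, 4)

print(add(triple_bananas, quadruple_oranges))  # (3, 4)
print(dot(triple_bananas, quadruple_oranges))  # 0
```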

The final result of the dot product process can be:

- Zero: we don't have any growth in the original direction

- Positive number: we have some growth in the original direction

- Negative number: we have negative (reverse) growth in the original direction
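A small sketch of the three possible outcomes, measured against a hypothetical "original" vector that only grows bananas. With integer inputs the zero check is exact; with floating-point vectors you would likely want to compare against a small tolerance instead.

```python
def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]

def sign_of_dot(original, other):
    """Report whether `other` adds growth along `original`, nothing, or reverse growth."""
    d = dot(original, other)
    if d > 0:
        return "positive"
    if d < 0:
        return "negative"
    return "zero"  # exact for integers; floats would need a tolerance

original = (3, 0)  # only grows bananas
print(sign_of_dot(original, (2, 1)))   # positive
print(sign_of_dot(original, (0, 4)))   # zero
print(sign_of_dot(original, (-2, 1)))  # negative
```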

Understanding the Calculation

"Applying vectors" is still a bit abstract. I think of it as "How much energy/push is one vector giving to the other?". Here's how I visualize it:

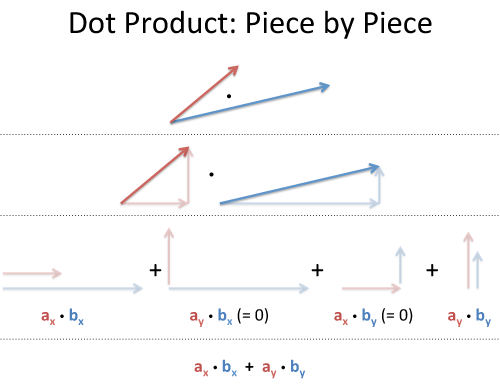

Rectangular Coordinates: Component-by-component overlap

As with multiplying complex numbers, look at how each x- and y-component interacts:

We list out all four combinations (x with x, y with x, x with y, y with y). Since the x- and y-coordinates don't affect each other (like holding a bucket sideways under a waterfall -- nothing falls in), the total energy absorption is absorption(x) + absorption(y):

$$\vec{a} \cdot \vec{b} = a_x b_x + a_y b_y$$

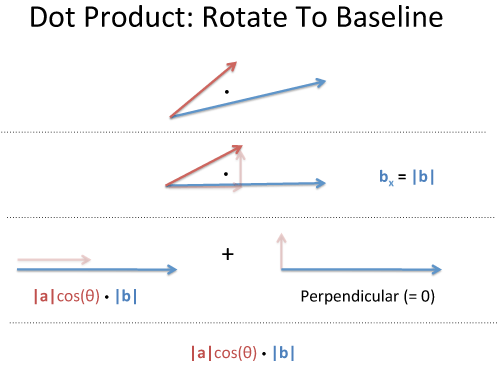
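One way to see why only two of the four combinations survive: write each vector in terms of the unit x and y directions and spell out all four pairings. In this sketch the `BASIS_OVERLAP` table is an assumption that encodes the bucket-under-a-waterfall idea: a unit direction fully overlaps itself (1) and not at all with a perpendicular one (0).

```python
# How much each unit direction "absorbs" from another: itself fully (1),
# a perpendicular one not at all (0).
BASIS_OVERLAP = {("x", "x"): 1, ("x", "y"): 0, ("y", "x"): 0, ("y", "y"): 1}

def dot_all_four(a, b):
    """List all four component pairings of a and b, then add them up."""
    a_parts = {"x": a[0], "y": a[1]}
    b_parts = {"x": b[0], "y": b[1]}
    terms = {
        (i, j): a_parts[i] * b_parts[j] * BASIS_OVERLAP[(i, j)]
        for i in "xy"
        for j in "xy"
    }
    return terms, sum(terms.values())

terms, total = dot_all_four((3, 2), (4, 5))
print(terms)  # {('x', 'x'): 12, ('x', 'y'): 0, ('y', 'x'): 0, ('y', 'y'): 10}
print(total)  # 22
```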

Polar coordinates: Projection

The word "projection" is so sterile: I prefer "along the path". How much energy is actually going in our original direction?

Here's one way to see it:

Take two vectors, a and b. Rotate our coordinates so b is horizontal: it becomes (|b|, 0), and everything is on this new x-axis. What's the dot product now? (It shouldn't change just because we tilted our head).

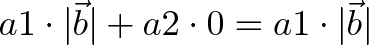

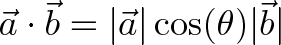

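Well, vector a has new coordinates (a1, a2), and we get:

$$\vec{a} \cdot \vec{b} = a_1 |\vec{b}| + a_2 \cdot 0 = a_1 |\vec{b}|$$

a1 is really "What is the x-coordinate of a, assuming b is the x-axis?" That is |a|cos(θ), aka the "projection":

$$\vec{a} \cdot \vec{b} = |\vec{a}|\cos(\theta)\,|\vec{b}|$$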
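To check that tilting our head changes nothing, this sketch rotates both vectors so that b lies on the positive x-axis, then compares the dot product before and after. The `rotate` helper is a hypothetical name; it applies the standard counterclockwise rotation by a given angle, and the example vectors are arbitrary.

```python
import math

def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]

def rotate(v, angle):
    """Rotate v counterclockwise by `angle` radians (i.e., tilt our head)."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])

a, b = (2.0, 3.0), (4.0, 1.0)

# Rotate everything so that b lands on the positive x-axis.
tilt = -math.atan2(b[1], b[0])
a_new, b_new = rotate(a, tilt), rotate(b, tilt)
print(b_new)                          # approximately (|b|, 0)
print(dot(a, b), dot(a_new, b_new))   # same value, up to rounding

# a1, the new x-coordinate of a, matches |a| cos(theta).
theta = math.atan2(a[1], a[0]) - math.atan2(b[1], b[0])
print(a_new[0], math.hypot(*a) * math.cos(theta))
```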

Analogies for the Dot Product

The common interpretation is "geometric projection", but it's so bland. Here are some analogies that click for me:

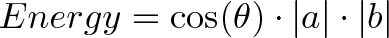

Energy Absorption

One vector represents the solar rays, the other is where the solar panel is pointing (yes, yes, the normal vector). Larger numbers mean stronger rays or a larger panel. How much energy is absorbed?

- Energy = Overlap in direction * Strength of rays * Size of panel

If you hold your panel sideways to the sun, no rays hit (cos(θ) = 0).

But... but... solar rays are leaving the sun, and the panel is facing the sun, and the dot product is negative when vectors are opposed! Take a deep breath, and remember the goal is to embrace the analogy (besides, physicists lose track of negative signs all the time).

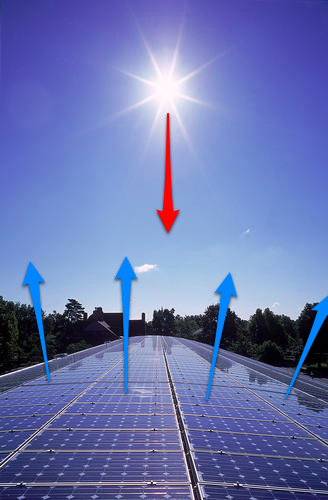

Mario-Kart Speed Boost

In Mario Kart, there are "boost pads" on the ground that increase your speed (Never played? I'm sorry.)

Imagine the red vector is your speed (x and y direction), and the blue vector is the orientation of the boost pad (x and y direction). Larger numbers are more power.

How much boost will you get? For the analogy, imagine the pad multiplies your speed:

- If you come in going 0, you'll get nothing [if you are just dropped onto the pad, there's no boost]

- If you cross the pad perpendicularly, you'll get 0 [just like the banana obliteration, it will give you 0x boost in the perpendicular direction]

But if we have some overlap, our x-speed will get an x-boost, and our y-speed will get a y-boost:

$$\text{boost} = \vec{a} \cdot \vec{b} = a_x b_x + a_y b_y$$

Neat, eh? Another way to see it: your incoming speed is |a|, and the max boost is |b|. The amount of boost you actually get (for being lined up with it) is cos(θ), for the total |a||b|cos(θ).

Physics Physics Physics

The dot product appears all over physics: some field (electric, gravitational) is pulling on some particle. We'd love to multiply, and we could if everything were lined up. But that's never the case, so we take the dot product to account for potential differences in direction.

It's all a useful generalization: Integrals are "multiplication, taking changes into account" and the dot product is "multiplication, taking direction into account".

And what if your direction is changing? Why, take the integral of the dot product, of course!
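An illustration of "the integral of the dot product": chop a path into many small steps, take force · displacement for each step, and add them up. The fields, the path, and the step count below are hypothetical choices for the sketch, and sampling the field at each step's midpoint is just one reasonable option.

```python
import math

def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]

def work_along_path(field, path, steps=1000):
    """Approximate the line integral of field . dr along path(t) for t in [0, 1].

    Each small step contributes an ordinary dot product (force . displacement);
    summing the steps handles a direction that keeps changing.
    """
    total = 0.0
    prev = path(0.0)
    for k in range(1, steps + 1):
        cur = path(k / steps)
        step = (cur[0] - prev[0], cur[1] - prev[1])
        mid = ((cur[0] + prev[0]) / 2, (cur[1] + prev[1]) / 2)
        total += dot(field(*mid), step)
        prev = cur
    return total

def downward_pull(x, y):
    """Hypothetical constant field pointing straight down."""
    return (0.0, -1.0)

def outward_push(x, y):
    """Hypothetical field pointing straight away from the origin."""
    return (x, y)

def quarter_circle(t):
    """Unit quarter circle from (1, 0) at t=0 to (0, 1) at t=1."""
    angle = t * math.pi / 2
    return (math.cos(angle), math.sin(angle))

print(work_along_path(downward_pull, quarter_circle))  # close to -1.0
print(work_along_path(outward_push, quarter_circle))   # close to 0.0
```

Because the downward pull is constant, only the overall rise of one unit matters, so the total lands near -1 however the path bends. The outward push is always perpendicular to a circular path, so every step contributes (almost) nothing.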

Onward and Upward

Don't settle for "Dot product is the geometric projection, justified by the law of cosines". Find the analogies that click for you! Happy math.

Other Posts In This Series

- Vector Calculus: Understanding the Dot Product

- Vector Calculus: Understanding the Cross Product

- Vector Calculus: Understanding Flux

- Vector Calculus: Understanding Divergence

- Vector Calculus: Understanding Circulation and Curl

- Vector Calculus: Understanding the Gradient

- Understanding Pythagorean Distance and the Gradient


84 Comments on "Vector Calculus: Understanding the Dot Product"

This can be a really tough one, and to be honest I don’t find many of the analogies very useful. I’m a video game programmer so I need the dot product a lot, and I still find it easiest to think in terms of projection, as sterile as it may sound. I think of the dot product as the component of the first vector in the direction of the second. Or, if you think of the second vector as a hyperplane (more sterile, mathematical terms), how long would the first vector be projected onto it?

I love this site, and I don’t comment often, but I think you do a great job, and I’m sure I’ll be pointing my son at your articles before too long (he’s nearly 1).

As a matter of professional curiosity, are you certain that the Mario Kart boost pads only give you a boost proportional to your alignment? Or do they just give you a constant boost so long as you are crossing them in the correct direction?

@wererogue: Thanks for the feedback! You bring up some great points.

1) Yep, projection is only sterile because the term is unfamiliar (but not the concept). Another might be “What is the shadow of one vector on the other?”. One subtlety I should have mentioned was that the dot product gives a plain number (i.e. total energy absorbed) and not a new vector on its own (when I think “projection”, I think of getting a new, likely shorter, vector that is in the direction of the first).

2) Awesome, looks like your son will be getting off to a head start :)

3) The real Mario Kart boost pads probably just add a constant amount to your speed, irrespective of direction (I should clarify that). My analogy was just to see “Hey, one vector is determining the multiplier effect, and the other the incoming direction.”

I’m no expert but just giving my 2c regarding my experience of reading this article:

I found your description of the different dot product equations as cartesian vs polar based very useful. I only grokked that the morning after reading.

Also, the ‘Piece by Piece’ illustrated well why we add only products of the like-for-like axes and discard the rest (because they’re perpendicular and always equal zero!)

What worked for me (after reading ‘Piece by Piece’) is to think of the vectors from an abstract POV – neither as cartesian/polar. From there, we decide whether to decompose down to cartesian/polar. Polar is much easier to intuit. Cartesian less so, but I think I see it now – you can decompose down to any basis you like as long as it’s orthonormal – doing so lets you discard the orthogonal pairs of axes and end up with the simple ax.bx+ay.by+az.bz sum. If they aren’t orthonormal then the “optimisation” cannot be done.

I didn’t find the analogies 100% either, but then again I haven’t really found a 100% analogy yet anywhere – I’d like people to share theirs if they have one. The ‘shadow’ one works well, but it’s always the fact that you multiply by the magnitude of the vector you’re projecting onto which doesn’t quite fit.
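A quick numerical check of the orthonormal-basis remark, assuming two arbitrary 2D vectors: re-express both in a rotated orthonormal basis and in a skewed basis, then apply the "multiply matching coordinates and add" shortcut. `coords_in_basis` and `naive_sum` are illustrative helpers; the coordinates come from solving a 2x2 system with Cramer's rule.

```python
import math

def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]

def coords_in_basis(v, e1, e2):
    """Solve v = c1*e1 + c2*e2 for (c1, c2) with Cramer's rule."""
    det = e1[0] * e2[1] - e1[1] * e2[0]
    c1 = (v[0] * e2[1] - v[1] * e2[0]) / det
    c2 = (e1[0] * v[1] - e1[1] * v[0]) / det
    return c1, c2

def naive_sum(a, b, e1, e2):
    """The 'ax*bx + ay*by' shortcut applied to coordinates in the basis (e1, e2)."""
    a1, a2 = coords_in_basis(a, e1, e2)
    b1, b2 = coords_in_basis(b, e1, e2)
    return a1 * b1 + a2 * b2

a, b = (2.0, 3.0), (4.0, -1.0)
angle = 0.7  # arbitrary rotation
rotated = ((math.cos(angle), math.sin(angle)), (-math.sin(angle), math.cos(angle)))
skewed = ((1.0, 0.0), (1.0, 1.0))  # not perpendicular, and (1, 1) is not unit length

print(dot(a, b))                  # 5.0
print(naive_sum(a, b, *rotated))  # 5.0, up to rounding
print(naive_sum(a, b, *skewed))   # -8.0
```

In the skewed basis the basis vectors overlap, so the cross terms the shortcut throws away are no longer zero and the sum drifts away from 5.0.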

I did find them quite inspiring though, especially that of Mario-Kart. I too noticed that the gameplay might differ from your description, but it’s not too hard to imagine a game world where the dot product would be the resultant behaviour! In fact that might be quite an interesting demo: Dot-product racing. :)

I just found this site and I like it a lot, thanks! I use the dot product a lot as well and I also think in terms of projection. But, as a matter of fact, I never miss the opportunity to think about the symmetry the dot product has, and I feel it is missing in the text. If you think in terms of projection, you can think “the first vector, projected on the second” or “the second vector, projected on the first.” The point is to understand that both are equal, and that is not very intuitive!

However, I love your way to use the dot product piecewise for each combination of dimensions and you can explain the distributive property of the operation with it. Very nice, I like it!

Much agreed with blub – the symmetry needs to be exposed more somehow.

I think this is half-exposed with Dot Product: Rotate to baseline, where the x-axis is used as the baseline. Perhaps the symmetry can be partially represented by rotating CCW to a y-axis baseline.

I’ve done some electrical engineering, some computer engineering and plain mathematics, and although I’ve never really had problems with the laws of physics, it has almost always been just formulas for me. It’s not like I did not have some way of expressing it, but wow, do I feel like I actually know stuff now! I know what is actually going on, instead of just mechanically applying the formulas to get the solution of a problem…

@Justin: Thanks for the feedback — it’s really helpful to know what’s working (or not).

It took me a while to realize the cartesian / polar versions as well – now, when I see that “equation foo = equation bar” I really try to see “Ok, what perspective does the foo-side have? The bar side?”.

Yes! What you said about vectors is exactly it — they exist (abstractly) and here are two possible ways to describe it. There’s probably more, but polar/cartesian are the common ones.

Yes, the analogies aren’t 100% for me (probably 90%), shadow is maybe 95%, there might be an even better one out there (as they come in I’ll be amending the article).

Dot product racing sounds like an interesting idea for gameplay mechanics :).

@blub: Great point on the symmetry – it’s something I don’t have the best intuitive grasp for either. For the piece-by-piece it makes algebraic sense. For the “projection” side, not as much — why would the projection of A onto B be the same as B onto A?

One way to think about it: a vector is really a direction and magnitude. When we write (10, 10) that’s really a shorthand for “the 45-degree angle, scaled up some amount — 10x the unit circle”. So when we’re doing the dot product, we can save the scaling for the end (find the projection on the unit circle, then scale up by each amount). Because otherwise, it’s weird that projecting (10,10) onto (200, 0) would give a different result than projecting onto (2000, 0). Why does it matter that the vector being projected “onto” is larger? (That’s why plain “projection” doesn’t click nicely with me).
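A sketch of "save the scaling for the end", using the vectors mentioned in the comment: compare the direction-only overlap of unit vectors with the raw dot product. `unit` is a hypothetical helper that divides a vector by its length.

```python
import math

def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]

def unit(v):
    """Scale v to length 1, keeping only its direction."""
    length = math.hypot(*v)
    return (v[0] / length, v[1] / length)

a = (10.0, 10.0)
for b in [(200.0, 0.0), (2000.0, 0.0)]:
    overlap = dot(unit(a), unit(b))                       # direction only: cos(45 degrees)
    rescaled = overlap * math.hypot(*a) * math.hypot(*b)  # apply both lengths at the end
    print(b, round(overlap, 6), dot(a, b), round(rescaled, 6))
```

The direction-only overlap is identical for both targets, while the raw dot product grows tenfold because the vector being projected onto grew tenfold; multiplying the overlap by both lengths restores the full value.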

@nik: Awesome! Yes, the goal for each article is to move beyond mechanical formula applications, glad if it helped!

[…] Vector Calculus: Understanding the Dot Product A vector is “growth in a direction”. The dot product lets us apply the directional growth of one vector to another: the result is how much we went along the original path (positive progress, negative, or zero). […]

I love this page, it’s so easy to understand. Simply outstanding.

Great post – I really liked the step-by-step breakdown of the different ways to look at it and I could imagine that the Mario Kart example would go down really well in class.

I found this discussion refreshing, especially when combined with Professor Strang’s Linear Algebra Course on MIT open courses. Also, having spent my first Saturday Night with AdaFruit, I loved the kill-your-fruit analogy. As you fill this out, a comparison with the cross product and some of the geometry of “in the plane” versus out of the plane would be great. Before I started regularly coming to this site to have my mind blown, I had it blown by such simple realizations as “the cross product of any two vectors in a plane gets you a vector normal to the plane.” Off to make some fruit salad…

@mark: Thanks for dropping by! Ah, I remember we used Prof. Strang’s book in our course too, sadly I don’t remember enough linear algebra (especially an intuition for eigenvectors / eigenvalues) but I’m looking forward to getting re-acquainted with it :).

@Daniel: Thanks — if you end up trying it, I’d love to know how it worked out! I’m still looking for really vivid analogies of what’s happening.

One of the most difficult things in mathematics isn’t failing to understand the initial concept (the one we’re first introduced to at school). Instead, the difficult thing in mathematics is to understand the generalised concept. You seem to have covered everything—and it was useful to me—but I don’t believe you’ve explained very well why it becomes a scalar.

Let’s rewrite the first equation a bit:

$$\frac{\vec{a} \cdot \vec{b}}{|\vec{b}|} = |\vec{a}|\cos(\theta)$$

or in words (arguing from the right side of the equation): to get the magnitude of the component of vector a in the direction of vector b, take the dot product of vectors a and b and divide by the magnitude of vector b. (Remember that $\vec{b}/|\vec{b}|$ is the unit vector $\vec{e}_b$.) This sounds remarkably similar to a coordinate transformation, specifically from polar to Cartesian coordinates. (I know you mention it, sorry)

But there is a second transformation happening at the same time. We’re changing from vectors to scalars. The “projection” doesn’t really explain that (at least it never did to me). Your question is better: “What is the x-coordinate of a, assuming b is the x-axis?” The thing to remember though, is that we’re simultaneously applying a parametrisation! That is why the dot product occurs in Line Integrals!

To summarise:

Step 1: take the component of vector a in the direction of vector b (it’s still a vector)

Step 2: alter the size of said component by multiplying it with |b|. (it’s still a vector)

Step 3: apply parametrisation to change it from a vector to a scalar.

That last part makes sense out of Line Integrals involving Work (and Energy): we’re parametrising vector a along curve C (which is defined by position vector b)

Hope it helps! (And I hope I didn’t make any mistakes.)

I forgot to mention that the context of the dot product determines whether its symmetry is important; whether its parametrisation is important; … and so on. The context also determines what we’re multiplying—and what our result represents (e.g. your: “What is the x-coordinate of a, assuming b is the x-axis?”).

Which is why I always had difficulty with the dot product. The dot product of a vector with itself is conceptually completely different. Especially when you contrast it with the use of a dot product in line integrals.

Thanks.

P.S. Love your integral explanations:

Integrals are “multiplication, taking changes into account”; and…

the dot product is “multiplication, taking direction into account”; and…

Line integrals are “multiplication, taking into account changes both in magnitude and direction”.

@alex: Really interesting comments! In my head, the dot product becomes a scalar because it’s the result of a directional multiplication, i.e. “Pretend we’re doing a regular 1-dimensional multiplication, but with “b” as the axis to use –what is the result?”

But, the transition from a vector to a scalar is interesting. So, the thinking is the “parameterization” is how far “a” is going along the curve defined by b (writing a in terms of b).

This is a little funky to me, because I normally think of parameterizations as being non-destructive, i.e. writing a circle as [cos(t), sin(t)] leaves the shape intact, but was defined using a different variable. I might not have the right terminology though.

Maybe another way to put it:

“b defines a path. If we go in direction a, how far do we move along the path?” (We can ignore scaling effects). For the line integrals, we have a curve we are moving along: how much energy is going along this path? We can parameterize the input energy [vector a] and say “Oh, now we’re going to rewrite a in terms of how much energy it’s putting along the curve.”

I think it’s a neat idea :). (Glad you enjoyed the integral explanations, I’m constantly refining my understanding of seemingly fundamental operations).

@kalid, you said: “Pretend we’re doing a regular 1-dimensional multiplication, but with “b” as the axis to use –what is the result?”

Now I get it. Basically, you rotate vector ‘a’ to point to the same direction as vector ‘b’. Then you shrink the vector by a factor of “cosine theta”. Then you enlarge the vector by |b| … (only it has stopped being a vector, argh!)

Although…, if you stop thinking about it as multiplying 2 vectors, and instead see it as multiplying a 1X3 matrix with a 3X1 vector, then it is easier to see it as just a multiplication. (Really, give me the cross product any day, as it doesn’t change its conceptual meaning as often.)
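The matrix view from the comment, as a plain-Python sketch (no NumPy assumed; `matmul` is an illustrative helper): treat a as a 1x3 row and b as a 3x1 column, and the ordinary matrix product is a 1x1 matrix holding the dot product.

```python
def matmul(A, B):
    """Plain-Python matrix product of A (n x m) and B (m x p)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

a = [1, 2, 3]
b = [4, 5, 6]

row = [a]                  # 1x3 matrix
column = [[x] for x in b]  # 3x1 matrix

print(matmul(row, column))               # [[32]]
print(sum(x * y for x, y in zip(a, b)))  # 32
```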

You are right that parametrisation is non-destructive. But (to use your example) you go from describing a circle in ‘x’ and ‘y’, to describing it in just one parameter ‘t’. Basically, the dot product combines a coordinate transformation and a parametrisation.

Which is why we always encounter dot products in line integrals. We are interested in the work done while moving along a curve inside a vector field (as an example). The coordinate transformation makes sure we only use the component of the vector that’s parallel to the direction we’re moving. The parametrisation makes sure we reduce the number of variables.

Moving away from line integrals. Taking into account the symmetry of the dot product, your “multiplication, taking direction into account” might be better described as a partial multiplication due to the fact that the vectors point in different directions. In other words:

|a| |b| cos(theta) = |a| ( |b| cos(theta) ) = ( |a| cos(theta) ) |b|

in that way, it makes clear that it doesn’t matter which of the two vectors you take as axis.
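A numerical sketch of the regrouping above, assuming two arbitrary 2D vectors: `shadow` (a hypothetical helper) computes |u|cos(θ) from the vectors' angles, and scaling a's shadow on b by |b| gives the same number as scaling b's shadow on a by |a|. Swapping the roles only flips the sign of the angle, and cosine ignores that sign.

```python
import math

def shadow(u, onto):
    """|u| cos(theta): how far u reaches along the direction of `onto`."""
    theta = math.atan2(u[1], u[0]) - math.atan2(onto[1], onto[0])
    return math.hypot(*u) * math.cos(theta)

a, b = (3.0, 1.0), (1.0, 2.0)
a_on_b_scaled = shadow(a, b) * math.hypot(*b)  # (|a| cos theta) |b|
b_on_a_scaled = shadow(b, a) * math.hypot(*a)  # |a| (|b| cos theta)
print(a_on_b_scaled, b_on_a_scaled)  # both about 5.0, i.e. 3*1 + 1*2
```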

Of course, in the context of line integrals it does matter (at least conceptually) which vector you take as axis, because only one will describe a path.

It is fun to realise that some of the most difficult concepts in mathematics are much easier than we thought. An integral for example is nothing more than an advanced form of an advanced form of addition. LOL.

I thought some more about this. Your explanation led me to try and wrap my head around line integrals and their use of the dot product. But…

You are absolutely right that the dot product in itself is just multiplication taking direction into account. Changing the vector notation into matrix notation makes that clear. And yet…

I asked myself: Why do we use the dot product? Well, we want an answer to a specific question, don’t we? That got me thinking about what vectors represent; which is “magnitude” and “direction”. So, when we multiply vectors we multiply both magnitude and direction.

Except…we don’t always care equally about magnitude and direction! When we only care about the magnitude of vectors, we don’t even bother with vector notation!

In the case of the dot product we care about the direction of vector ‘a’ with respect to vector ‘b’ (or, equivalently, the direction of vector ‘b’ with respect to vector ‘a’). In other words, we care about the RELATIVE DIRECTION, which explains why it is a scalar.

This raises the question: “How do we multiply vectors when we care about the absolute direction?” For a two dimensional vector the answer is easy; we convert the vector to a complex number and multiply complex number ‘a’ with complex number ‘b’.

In a similar way, this explains the multiplication of an Nx1 matrix with a 1xN matrix to get an NxN matrix. But in this case, we care about multiple directions (instead of relative direction, or absolute direction). The Jacobian is a good example.

Similarly, the cross product. We care about direction, but we only care about the direction perpendicular to both vector ‘a’ and vector ‘b’.

So vector multiplication is about multiplying a pair of numbers (magnitude and direction), whereby we take into account ‘direction’ to varying degrees.
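To make the comment's catalogue concrete, here is a sketch that puts a few of these products side by side; the vectors and helper names are arbitrary choices. Two facts it leans on are standard algebra: for 2D vectors written as complex numbers, the real part of conj(a)·b is the dot product (its imaginary part is the 2D cross product), and the diagonal of the outer product (its trace) adds up to the dot product.

```python
def dot3(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross3(a, b):
    """3D cross product: a vector perpendicular to both a and b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def outer(a, b):
    """Nx1 column times 1xN row: an NxN table of every pairing a_i * b_j."""
    return [[x * y for y in b] for x in a]

# 2D vectors as complex numbers: conj(a) * b keeps only the *relative* direction.
a2, b2 = complex(3, 1), complex(1, 2)
rel = a2.conjugate() * b2
print(rel.real)  # 5.0, the 2D dot product 3*1 + 1*2
print(a2 * b2)   # (1+7j), plain complex multiplication combines absolute rotations

a, b = (1, 2, 3), (4, 5, 6)
c = cross3(a, b)
print(c, dot3(c, a), dot3(c, b))  # (-3, 6, -3) 0 0

M = outer(a, b)
print(sum(M[i][i] for i in range(3)), dot3(a, b))  # 32 32
```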

To summarise, I would suggest changing “…the dot product is ‘multiplication, taking direction into account’.” into:

The dot product is multiplication while taking relative direction (or the difference in direction) into account.

Anyway, thank you for explanations like these. They help me to understand the basic mathematical idea—instead of the more specific physical idea—behind mathematical concepts like these.

Thanks.

Excellent article, as always. As a current engineering student, I’m going to be eternally grateful that I took my statics and dynamics courses BEFORE vector calculus, so I had a really good intuition on the physical uses of dot and cross products that helped me wrap my head around the mathematical intricacies. Sometimes there’s a benefit to be had from teaching things in the “wrong” order like that. Anyway, keep it up, I look forward to reading your thoughts on the cross product soon–the relationship between the cross product and the determinant is still one of the biggest ‘mystery math’ things out there for me.

@alex:

Yep, totally agree with the grouping — it shows the symmetry by thinking of a onto b, or b onto a. And yep, with line integrals, you usually have a defined Force and Path vector (it’s not as intuitive to project the Path onto the Force).

I love breaking down complicated ideas into easy-to-digest parts… yep, the integral is a wrapper around the idea of “applying” something piece by piece (of which multiplication and repeated addition are very simple base cases).

On the vector notation: I guess it depends on how deep you want to go :). For me, I take it as an axiom that “we want to figure out how much one vector pushes in the direction of the other, it will be a single number (called the dot product) and here’s why it should be what it is (i.e., x and y components interacting)”

Yes, relative direction is a good way to put it — the absolute doesn’t matter. In a system of relative coordinates (just angles between them), what do we know about the force of one onto the other? (Interestingly, I stumbled upon the concept of coordinate-free geometry today).

When we care about absolute direction, yep, we want different results when vectors that are 90 degrees apart but oriented differently are combined. I think we need to be careful with complex numbers though (for terminology) since the typical dot product gives 0 when perpendicular, but complex numbers do a rotation.

I might tweak it to “the dot product is multiplication, taking the difference in direction into account”. (I’ll have to tweak it). With all of these 1-liners, it’s a tradeoff between an immediate click vs. getting into too much detail.

And the feeling for these discussions is mutual, I love exploring these ideas!

@Joe: Thanks Joe. I agree, there’s an interplay between theory and application (I learned vector calc before E&M, and having physics examples definitely solidified my earlier understanding). I don’t think you can teach in some pyramid where the base ideas are learned, divorced from examples, and then the applications are sprinkled in later.

Appreciate the encouragement, I’d love to do the cross product & determinant in the future! (Two concepts I really, really want to get an intuition for & not just the mechanical calculation).

I’d love to feature this post in the Math and Multimedia Carnival #21 on my site, Math Concepts Explained. Please let me know! My site is at http://sk19math.blogspot.com. Thanks!

@Shaun: Definitely! I’ve emailed you as well, but feel free to use the content as you need. Thanks for asking!


Kalid, thanks for the great article and even more for your wonderful “mission” :)

There’s still something that doesn’t click to me about the dot product, hopefully you’ll be able to give me a hint.

Formally, I can see that the two definitions, ax*bx+ay*by and |a||b|cos(t), are equivalent. Given what I expect to be the meaning of the dot product, I can figure out why summing the products of pairwise parallel components and multiplying the vector lengths times cos(t) both “make sense”, but it’s not straightforward for my intuition to realize why they make exactly the same sense. Why are those reasonable measures of “weighted parallelism” (I’m just trying to give a more meaningful name to the dot product than “dot product”, but it doesn’t really matter) exactly the same?

My only way to help intuition is to have intuitive arguments to derive (1) the latter from the former and (2) the former from the latter (I’m not talking about formal proofs here, those are available everywhere). Thanks to your great article, I can now see path (1), but I still miss an intuition for path (2).

More precisely, I can see that ax*bx+ay*by is invariant wrt rotation, and therefore I can choose one particular frame where bx=|b|, by=0 and ax=a*cos(t), which leads to |a||b|cos(t). That’s path (1) and it gives my intuition a strong hint on the equivalence of the two definitions. Thank you for that.

However, should I start from |a||b|cos(t) and try to derive ax*bx+ay*by, I’d be kind of lost. I could make it to the point that, being |a||b|cos(t) obviously invariant wrt rotation as well, I could fix one frame where |b|=bx and |a|cos(t)=ax, and that would cover the ax*bx part, which in this particular frame gives the value of the dot product. But what’s the intuition that makes me generalize it to ax*bx+ay*by? How do I come to the point that I tell myself “hey, of course I need to add the “ay*by” there!”?

Thank you once again for your great contributions :)

Regards,

Andrea

Hi Andrea, thanks for the comment. I’m similar — I can go from ax*bx + ay*by to |a||b|cos(t), but not vice-versa. I think it’s because I don’t have a good, geometric intuition for the cosine rule (probably a good candidate for another article!).

I really like your phrase “weighted parallelism”, I love finding ways to interpret fundamental operations (I agree that “dot product” is not enlightening…it’s just the name of the operator symbol!).

My high level intuition is that “weighted parallelism” is a fundamental concept (it exists on its own), which can then reduce to rectangular [ax*bx + ay*by] or polar (|a||b|cos(t)) coordinates. I see it like naming an object in the real world: we can say “apple” or “manzana” (Spanish). It’s true that “apple” and “manzana” are equivalent, but it’s tough to see using a “letter-by-letter” comparison. It’s easier to trace the word history to the core concept and see how they’re the same. Similarly, it’s tough to jump directly from rectangular to polar results without moving through the intermediate concept.

That said, we can still try!

Intuitively, I think the key is realizing that “weighted parallelism” must come from both components that *could* be parallel. In your last example, the “Hey, I need to add ay*by” is really “Hey, I need to add any *possible* contribution from ay*by” (i.e., if you decided to choose a different frame where |b| = by, then the entire contribution would be coming from ay*by).

More mechanically, the law of cosines lets us express the cosine in terms of the lengths of a, b and the length between them (“c”). The length between them can be rewritten as (a – b)^2 [where a and b are vectors], which reduces to a^2 + b^2 – 2 (a.b), and this last component (a.b) gives us the magic “ax*bx + ay*by” term. This argument may be a bit circular (using the dot product during a proof of the meaning for the dot product), I’d like to dive into it more as well! Check out the “vector formulation” on this article: http://en.wikipedia.org/wiki/Law_of_cosines
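One way to sidestep some of the circularity is to expand |a − b|² with the Pythagorean theorem alone: (ax − bx)² + (ay − by)² = |a|² + |b|² − 2(ax·bx + ay·by). The like-for-like products appear because each coordinate difference is squared on its own. Setting that equal to the law of cosines, |a|² + |b|² − 2|a||b|cos(θ), gives ax·bx + ay·by = |a||b|cos(θ). The sketch below (helper names are illustrative, vectors arbitrary) checks numerically that the dot product can be recovered from three lengths alone.

```python
import math

def length(v):
    return math.hypot(*v)

def dot_from_lengths(a, b):
    """Recover a . b using only |a|, |b| and |a - b|."""
    diff = (a[0] - b[0], a[1] - b[1])
    return (length(a) ** 2 + length(b) ** 2 - length(diff) ** 2) / 2

def dot_components(a, b):
    return a[0] * b[0] + a[1] * b[1]

a, b = (2.0, 3.0), (4.0, -1.0)
print(dot_from_lengths(a, b), dot_components(a, b))  # both about 5.0
```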

Hope this helps! :)

Kalid,

thanks a lot for your answer!

Yes, I also thought that when you choose a frame where |b|=by the whole contribution comes from ay*by, and therefore it is reasonable to somehow combine ax*bx and ay*by, but I couldn’t figure out how come the combination turns out to be so simple (just summing the two components). There’s probably something simple I’m failing to see :)

Thanks for the hint on the law of cosines, it might indeed be the key to answer my question, although the circularity you already spotted makes me feel suspicious about it. I’ll need to give it some deeper thought! :)

By the way, I love the way you reason in terms of analogies. Indeed a letter-by-letter comparison of “apple” and “manzana” is not the way to match the two terms, and their semantic association to the concept of apple is fundamental. However, I think the letter-by-letter comparison has also something to give, but I need to change the analogy in order to explain in what sense.

The way I look at math is as a wild island I have to explore. In the beginning, I have no map of the territory. As I proceed with the exploration and discover interesting places, I draw my own map by putting crosses (theorems or concepts) and tracing paths between them (proofs or reasoning lines), trying to find ways that connect all the interesting places.

There might be several ways to get to the same place from the same starting point, and it might not be obvious that some paths lead to the same place. Some might be longer and easier, some harder and shorter. Sometimes I feel surprised by ending up in the same place as I did by following another path; sometimes I’m surprised to end up somewhere different than I thought. That makes me realize I don’t know the territory.

Now, given a good map with places and paths (theorems and formal proofs), I am normally (not always!) able to get from anywhere to anywhere, but that's not enough to say I "know" the island. If instead I could orienteer well enough to be aware at any time of where I am as I follow one path, and of where I would be if I were following an alternative one, I would feel confident that I know the territory, as if I could watch the whole island from above. Besides, that's what I need if I want to discover new places or find new ways of my own.

I like this analogy because I do not think mathematical concepts are conceived in the first place as “useful ideas to represent mathematically” (like “weighted parallelism”), and then given a mathematical formulation. I rather believe that they come up as people explore the island, and only after, when it’s clear that these places are frequently visited and somehow useful, they are given a name and (when possible) an interpretation. Like putting a cross and an intuitive label on the map.

But the intuitive label is a sign for tourists, and knowing where the useful places are and what paths lead to them is not enough for an explorer; I want to know where I am on the island as I follow each path, what obstacles I’m going to encounter, what deviations I will likely need to take, and so on. That makes me able to trace a step-by-step (letter-by-letter) comparison of any path.

Anyway, I'll try to follow the path you pointed me to with the law of cosines and let you know if it makes my map richer! I look forward to your next article :)

Regards,

Andrea

Hi Andrea,

No problem, I love these types of discussions! That's a good question about the sum (vs. the product, let's say). My intuition tells me that 1) the combination must be symmetric [i.e., x and y should contribute equal amounts] and 2) the combination must be "independent" (if the x contribution is zero, y can still contribute). The two ways I can think of offhand are x + y and x*y, but x*y fails the second test. But this might be me scrambling to find something that fits :).
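The two tests above can be tried on a concrete pair. This is a sketch: the vectors are arbitrary examples, and `combine_sum` / `combine_product` are illustrative names, not anything from the article. When the x-parts contribute nothing, the product of the per-axis contributions collapses to 0, while the sum still records the y-axis push and matches the polar value |a||b|cos(theta).

```python
import math

def contributions(a, b):
    # Per-axis "push": how much the x-parts and the y-parts reinforce each other
    return a[0] * b[0], a[1] * b[1]

def combine_sum(a, b):
    x, y = contributions(a, b)
    return x + y

def combine_product(a, b):
    x, y = contributions(a, b)
    return x * y

def polar(a, b):
    # |a| |b| cos(theta), the angle-based form of the dot product
    theta = math.atan2(a[1], a[0]) - math.atan2(b[1], b[0])
    return math.hypot(*a) * math.hypot(*b) * math.cos(theta)

a = (0.0, 3.0)  # no x-component, so the x contribution is zero
b = (5.0, 2.0)

print(contributions(a, b))    # (0.0, 6.0)
print(combine_sum(a, b))      # 6.0
print(combine_product(a, b))  # 0.0: the y push is lost
print(polar(a, b))            # approximately 6.0, agreeing with the sum
```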

Glad you're enjoying the analogies. I find that if I put problems in terms of familiar things, I can see deeper relationships. And you're right, the letter-by-letter comparison isn't quite right, because there *is* some overlap. A better example may be "university" and "universidad" (Spanish), where there seems to be some structure (many English words can be translated to Spanish by replacing "ity" with "idad", e.g., unity => unidad).

I really like your map analogy, and your intuitive test of whether you've understood a topic. I actually use that one as an example of rote learning vs. insight: if you memorize directions, that's rote learning, but if you remember the map (and can therefore derive the directions as needed), that's intuition. There may be deeper levels, e.g., knowing several paths to the same goal, and what would happen if you had taken those alternates. And I agree about us putting the labels *after* we've figured out some interesting, emergent concept. Sometimes studying the labels directly seems backwards!

Really appreciate the discussion, hope the law of cosines sheds some light for you (I still don’t have a firm intuition for it, so its derivation is mostly symbol manipulation for me).

Take care,

-Kalid

Hi Kalid,

I’m really impressed that someone is doing this intuition thing. I always wanted to write some articles on the necessity of understanding Math physically; guess you beat me to it =P.

I don't quite understand why one adds all the resolved vectors at the end. When one has resolved all the vectors, shouldn't that yield a resultant vector? And wouldn't the magnitude of the resultant vector be the dot product? In that case, one would have sqrt((ax*bx)^2 + (ay*by)^2). I think my confusion is why one can add the magnitude of a vector to that of another which is perpendicular to it.

Thanks,

Brian

@Brian: Glad you're enjoying it. Don't let this site stop you, though; I want to read your insights too (I love seeing how people approach things).

Great question on why we don't end up with a vector. There are a few ways to interpret how one vector "overlaps" with another. "Dot product" is a horrible name because it doesn't tell you anything *about* the overlap, just the name of the symbol used (woohoo). The idea is to ask "How much does vector A push in the direction of vector B?". There is an implicit vector here (the reference vector, B), so the operation just gives the size.
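One way to see the "size, not a vector" point is to measure how far A pushes along B's direction. A sketch with made-up vectors; `push_along` is a hypothetical helper name: dividing a.b by |b| gives the signed length of A's shadow on B (the scalar projection), so a.b itself is that push scaled by |b|. The direction comes from B, so if a vector is wanted it can be rebuilt from the size and B's unit direction.

```python
import math

def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]

def push_along(a, b):
    # Signed length of a's shadow on the line through b (scalar projection)
    return dot(a, b) / math.hypot(*b)

a = (3.0, 4.0)
b = (2.0, 0.0)  # reference direction: the x-axis

print(push_along(a, b))                              # 3.0: a pushes 3 units along b
print(push_along(a, b) * math.hypot(*b), dot(a, b))  # 6.0 6.0

# The direction is supplied by b, so a vector can be rebuilt if needed
length_b = math.hypot(*b)
unit_b = (b[0] / length_b, b[1] / length_b)
size = push_along(a, b)
print((size * unit_b[0], size * unit_b[1]))          # (3.0, 0.0)
```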

The adding of components is basically breaking each vector (A and B) into parts, and seeing how each part might possibly push in the same direction. It turns out, only the horizontal parts can push in the same direction (ax * bx) and only the vertical parts can push in the same direction (ay * by). The combined “push” is the sum of how much each axis is pushing. Hope this helps!
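To see why the per-axis pushes are added as plain signed numbers rather than combined Pythagorean-style, here is a sketch comparing Brian's proposed sqrt((ax*bx)^2 + (ay*by)^2) with the sum. The vector pairs are chosen for illustration. The sum tracks |a||b|cos(theta); the root-of-squares can never be negative, and it reports a nonzero push even for perpendicular vectors like (1, 1) and (1, -1), where the two axis pushes cancel.

```python
import math

def sum_of_pushes(a, b):
    # The dot product: per-axis pushes added as signed numbers
    return a[0] * b[0] + a[1] * b[1]

def root_of_squares(a, b):
    # Brian's alternative: treat the per-axis pushes as perpendicular legs
    return math.sqrt((a[0] * b[0]) ** 2 + (a[1] * b[1]) ** 2)

def polar(a, b):
    # |a| |b| cos(theta)
    theta = math.atan2(a[1], a[0]) - math.atan2(b[1], b[0])
    return math.hypot(*a) * math.hypot(*b) * math.cos(theta)

pairs = {
    "perpendicular": ((1.0, 1.0), (1.0, -1.0)),
    "opposite": ((1.0, 2.0), (-1.0, -2.0)),
}

for name, (a, b) in pairs.items():
    print(name, sum_of_pushes(a, b), round(root_of_squares(a, b), 3), round(polar(a, b), 3))
# perpendicular 0.0 1.414 0.0
# opposite -5.0 4.123 -5.0
```

The per-axis pushes are numbers along the *same* reference direction, not perpendicular legs, so they combine by ordinary addition and can cancel.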

Dude, I love your site! The way you explain math reads like prose, yet I still feel like I'm learning so much more than I do from my classes/textbooks. You are a gifted teacher. I think you need to author a revolutionary math textbook that does not sacrifice clarity in the name of rigor. Your website is the solution to the problem posed in Lockhart's Lament. Keep doing what you are doing; I only wish I could have discovered this 10 years ago. I probably still would have preferred video games, but who knows.

@Avo: Thanks so much. That’s exactly what I wanted — math as a story, something interesting to follow, not a dry series of steps. Really appreciate the encouragement!

Heh, it's not a choice between learning and video games; you can do both :).