Does .999… = 1? The question invites the curiosity of students and the ire of pedants. A famous joke illustrates my point:

A man is lost at sea in a hot air balloon. He sees a lighthouse approaching in the fog. “Where am I?” he shouts desperately through the wind. “You’re in a balloon!” he hears as he drifts off into the distance.

The response is correct but unhelpful. When people ask about 0.999… they aren’t saying “Hey, could you find the limit of a convergent series under the axioms of the real number system?” (Really? Yes, Really!)

No, there’s a broader, more interesting subtext: What happens when one number gets infinitely close to another?

It’s a rare thing when people wonder about math: let’s use the opportunity! Instead of bluntly offering technical definitions to satisfy some need for rigor, let’s allow ourselves to explore the question.

Here’s my quick summary:

- The meaning of 0.999… depends on our assumptions about how numbers behave.

- A common assumption is that numbers cannot be “infinitely close” together — they’re either the same, or they’re not. With these rules, 0.999… = 1 since we don’t have a way to represent the difference.

- If we allow the idea of “infinitely close numbers”, then yes, 0.999… can be less than 1.

Math can be about questioning assumptions, pushing boundaries, and wondering “What if?”. Let’s dive in.

Do Infinitely Small Numbers Exist?

The meaning of 0.999… is a tricky concept, and depends on what we allow a number to be. Here’s an example: Does “3 – 4” mean anything to you?

Sure, it’s -1. Duh. But the question is only simple because you’ve embraced the advanced idea of negatives: you’re ok with numbers being less than nothing. In the 1700s, when negatives were brand new, the concept of “3-4” was eyed with great suspicion, if allowed at all. (Geniuses of the time thought negatives “wrapped around” after you passed infinity.)

Infinitely small numbers face a similar predicament today: they’re new, challenge some long-held assumptions, and are considered “non-standard”.

So, Do Infinitesimals Exist?

Well, do negative numbers exist? Negatives exist if you allow them and have consistent rules for their use.

Our current number system assumes the long-standing Archimedean property: if a non-negative number is smaller than every positive number, it must be zero. More simply, infinitely small numbers don’t exist.

The idea should make sense: numbers should be zero or not-zero, right? Well, it’s “true” in the same way numbers must be there (positive) or not there (zero) — it’s true because we’ve implicitly excluded other possibilities.

But, it’s no matter — let’s see where the Archimedean property takes us.

The Traditional Approach: 0.999… = 1

If we assume infinitely small numbers don’t exist, we can show 0.999… = 1.

First off, we need to figure out what 0.999… means. Most mathematicians see the problem like this:

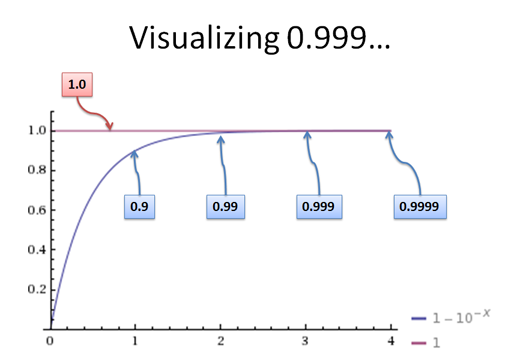

- 0.999… represents a sequence of numbers: 0.9, 0.99, 0.999, 0.9999, and so on

- The question: does this sequence get so close (converge) to a result that we cannot tell them apart?

This is the reasoning behind limits: Does our “thing to examine” get so darn close to another number that we can’t tell them apart, no matter how hard we try?
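To make the idea concrete, here is a minimal Python sketch, assuming we model each truncation of 0.999… as an exact fraction. The helper name `truncation` is hypothetical and used only for illustration.

```python
from fractions import Fraction

def truncation(n: int) -> Fraction:
    """Return 0.99...9 (n nines) as an exact fraction."""
    return 1 - Fraction(1, 10 ** n)

# Print the first few terms and how far each one is from 1.
for n in range(1, 6):
    term = truncation(n)
    gap = 1 - term
    print(f"n={n}: term={term}  gap={gap}")
```

Each extra 9 divides the gap by ten, yet no finite term has a gap of exactly zero. The limit asks what the terms are closing in on, not whether any single term arrives.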

“Well,” you say, “How do you tell numbers apart?”. Great question. The simplest way to compare is to subtract:

- if a – b = 0, they’re the same

- if a – b is not zero, they’re different

The idea behind limits is to find some point at which “a – b” becomes zero (less than any positive number); that is, we can’t tell the “number to test” and our “result” apart.

The Error Tolerance

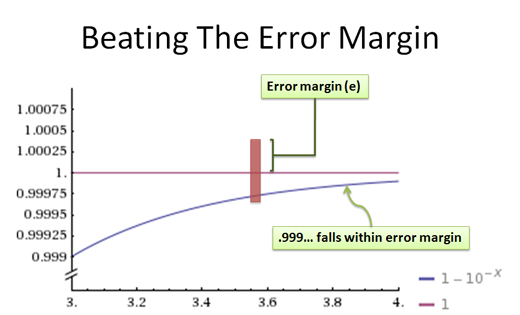

It’s still tough to compare items when they take such different forms (like an infinite series). The next clever idea behind limits: define an error tolerance:

- You give me your tolerance for error / accuracy level (call it “e”)

- I’ll see whether I can get the two things to fall within that tolerance

- If so, they’re equal! If we can’t tell them apart, no matter how hard we try, they must be the same.

Suppose I sell you a raisin granola bar, claiming it’s 100 grams. You take it home, examine the non FDA-approved wrapper, and decide to see if I’m lying. You put the snack on your scale and it shows 100 grams. The scale is accurate to 1 gram. Did I trick you?

You couldn’t know: as far as you can tell, within your accuracy, the granola bar is indeed 100 grams. Our current problem is similar: I’m selling you a “granola bar” weighing 1 gram, but sneaky me, I’m actually giving you one weighing 0.999… grams. Can you tell the difference?

Ok, let’s work this out. Suppose your error tolerance is 0.1 gram. Then if you ask for 1, and I give you 0.99, the difference is 0.01 (one hundredth) and you don’t know you’ve been tricked! 1 and .99 look the same to you.

But that’s child’s-play. Let’s say your scale is accurate to 1e-9 (.000000001, a billionth of a gram). Well then, I’ll sell you a granola bar that is .999999999999 (only one trillionth of a gram off) and you’ll be fooled again! Hah!

In fact, instead of picking a specific tolerance like 0.1, let’s use a general one (e):

- Error tolerance: e

- Difference: Well, suppose e has “n” digits of precision. Let 0.999… expand until we have a difference requiring n+1 digits of precision to detect.

- Therefore, the difference can always be made less than e! And the difference appears to be zero.

See the trick? Here’s a visual way to represent it:

The straight line is what you’re expecting: 1.0, that perfect granola bar. The curve is the number of digits we expand 0.999… to. The idea is to expand 0.999… until it falls within “e”, your tolerance:

At some point, no matter what you pick for e, 0.999… will get close enough to satisfy us mathematically.
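The tolerance game can be sketched in a few lines of Python. This is only an illustration under the assumption that "fooling the scale" means the gap is strictly smaller than the tolerance; the function name `nines_needed` is hypothetical.

```python
from fractions import Fraction

def nines_needed(tolerance: Fraction) -> int:
    """Smallest n such that 1 - 0.99...9 (n nines) is strictly below the tolerance."""
    if tolerance <= 0:
        raise ValueError("tolerance must be positive")
    n = 1
    # The gap after n nines is exactly 10**-n; keep adding nines until it fits.
    while Fraction(1, 10 ** n) >= tolerance:
        n += 1
    return n

print(nines_needed(Fraction(1, 10)))       # tolerance of 0.1 gram
print(nines_needed(Fraction(1, 10 ** 9)))  # tolerance of a billionth of a gram
```

For a tolerance of 0.1 the sketch asks for two nines (0.99), and for a billionth it asks for ten. The loop always terminates for any positive tolerance, which is the whole point: you pick e, and there is always a truncation that beats it.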

(As an aside, 0.999… isn’t a growing process, it’s a final result on its own. The curve represents the idea that we can approximate 0.999… with better and better accuracy — this is fodder for another post).

With limits, if the difference between two things is smaller than any margin we can dream of, they must be the same.

Assuming Infinitesimals Exist

This first conclusion may not sit well with you — you might feel tricked. And that’s ok! We seem to be ignoring something important when we say that 0.999… equals 1 because we, with our finite precision, cannot tell the difference.

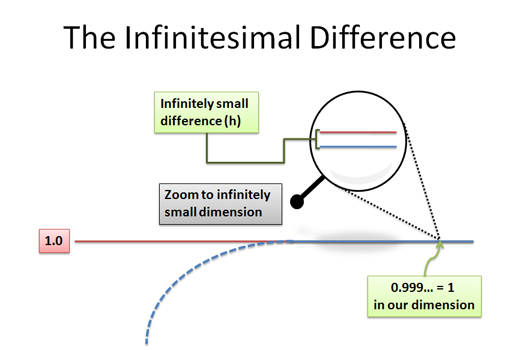

Newer number systems have developed the idea that infinitesimals exist. Specifically:

- Infinitely small numbers can exist: they aren’t zero, but look like zero to us.

This seems to be a confusing idea, but I see it like this: atoms don’t exist to cavemen. Once they’ve cut a rock into grains of sand, they can go no further: that’s the smallest unit they can imagine. Things are either grains, or not there. They can’t imagine the concept of atoms too small for the naked eye.

Compared to other number systems, we’re cavemen. What we call “tiny numbers” are actually gigantic. In fact, there can be another “dimension” of numbers too small for us to detect — numbers that differ only in this tiny dimension look identical to us, but are different under an infinitely powerful microscope.

I interpret 0.999… like this: Can we make a number a bit less than 1 in this new, infinitely small dimension?

Hyperreal Numbers

Hyperreal numbers are one system that uses this “tiny dimension” to examine infinitely small numbers. In this, infinitesimals are usually called “h”, and are considered to be 1/H (where big H is an infinitely large number).

So, the idea is this:

- 0.999… < 1 [We’re assuming it’s allowed to be smaller, and infinitely small numbers exist]

- 0.999… + h = 1 [h is the infinitely small number that makes up the gap]

- 0.999… = 1 – h [Equivalently, we can subtract an infinitely small amount from 1]

So, 0.999… is just a tiny bit less than 1, and the difference is h!

Back to Our Numbers

The problem is, “h” doesn’t exist back in our macroscopic world. Or rather, h looks the same as zero to us — we can’t tell that it’s a tiny atom, not the lack of any matter altogether. Here’s one way to visualize it:

When we switch back to our world, it’s called taking the “standard part” of a number. It essentially means we throw away all the h’s, and convert them to zeroes. So,

- 0.999… = 1 – h [there is an infinitely small difference]

- St(0.999…) = St(1 – h) = St(1) – St(h) = 1 – 0 = 1 [And to us, 0.999… = 1]
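Here is a toy model of that bookkeeping in Python. It is a sketch under a big simplifying assumption: a number is just a pair (ordinary part, multiple of one infinitesimal h), with addition, subtraction and comparison only. It is not an implementation of the hyperreals, and the names `TinyNumber` and `standard_part` are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TinyNumber:
    """A toy number of the form real + tiny*h, where h stands for one infinitesimal."""
    real: float
    tiny: float = 0.0

    def __add__(self, other: "TinyNumber") -> "TinyNumber":
        return TinyNumber(self.real + other.real, self.tiny + other.tiny)

    def __sub__(self, other: "TinyNumber") -> "TinyNumber":
        return TinyNumber(self.real - other.real, self.tiny - other.tiny)

    def __lt__(self, other: "TinyNumber") -> bool:
        # Ordinary parts are compared first; the h-part only breaks ties.
        return (self.real, self.tiny) < (other.real, other.tiny)

    def standard_part(self) -> float:
        # "Throw away all the h's": keep only the ordinary part.
        return self.real

ONE = TinyNumber(1.0)
H = TinyNumber(0.0, 1.0)
almost_one = ONE - H  # plays the role of 1 - h

print(almost_one < ONE)                                  # strictly smaller in the toy system
print(almost_one.standard_part() == ONE.standard_part()) # identical once h is discarded
```

Inside the toy system `almost_one` and `ONE` are different objects with a strict ordering between them; after taking the standard part, both collapse to the same ordinary number.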

The happy compromise is this: in a more accurate dimension, 0.999… and 1 are different. But, when we, with our finite accuracy, try to describe the difference, we cannot: 0.999… and 1 look identical.

Lessons Learned

Let’s hop back to our world. The purpose of “Does 0.999… equal 1?” is not to spit back the answer to a limit question. That’s interpreting the query as “Hey, within our system what does 0.999… represent?”

The question is about exploration. It’s really, “Hey, I’m wondering about numbers infinitely close together (.999… and 1). How do we handle them?”

Here’s my response:

- Our idea of a number has evolved over thousands of years to include new concepts (integers, decimals, rationals, reals, negatives, imaginary numbers…).

- In our current system, we haven’t allowed infinitely small numbers. As a result, 0.999… = 1 because we don’t allow there to be a gap between them (so they must be the same).

- In other number systems (like the hyperreal numbers), 0.999… is less than 1. Here, infinitely small numbers are allowed to exist, and this tiny difference (h) is what separates 0.999… from 1.

There are life lessons here: can we extend our mental model of the world? Negatives gave us the conception that every number can have an opposite. And you know what? It turns out matter can have an opposite too (matter and antimatter annihilate each other when they come in contact, just like 3 + (-3) = 0).

Let’s think about infinitesimals, a tiny dimension beyond our accuracy:

- Some theories of physics reference tiny “curled up” dimensions which are embedded into our own. These dimensions may be infinitely small compared to our own — we never notice them. To me, “infinitely small dimensions” are a way to describe something which is there, but undetectable to us.

- The physical sciences use “significant figures” and error margins to specify the inherent inaccuracy of our calculations. We know that reality is different from what we actually measure: infinitesimals help make this distinction explicit.

- Making models: An infinitely small dimension can help us create simple but accurate models to solve problems in our world. The idea of “simple but accurate enough” is at the heart of calculus.

Math isn’t just about solving equations. Expanding our perspective with strange new ideas helps disparate subjects click. Don’t be afraid to wonder “What if?”.

Appendix: Where’s the Rigor?

When writing, I like to envision a super-pedant, concerned more with satisfying (and demonstrating) his rigor than educating the reader. This mythical(?) nemesis inspires me to focus on intuition. I really should give Mr. Rigor a name.

But, rigor has a use: it helps ink the pencil-lines we’ve sketched out. I’m not a mathematician, but others have written about the details of interpreting 0.999… as 1 or as less than 1:

“So long as the number system has not been specified, the students’ hunch that .999… can fall infinitesimally short of 1, can be justified in a mathematically rigorous fashion.”

My goal is to educate, entertain, and spread interest in math. Can you think of a more salient way to get non-math majors interested in the ideas behind analysis? Limits aren’t going to market themselves.

167 Comments on "A Friendly Chat About Whether 0.999… = 1"

My Calculus prof proved this for us in class.

Let N = 0.999…

Assume N = 1, now multiply both sides by 10

10N = 10, now subtract 9 from both sides

N = 1

I *think* that’s how he did it.

Why “0.999 = 1” is counter-intuitive:

If you have “0.99” instead of “0.9”, it means that you are one step closer to 1, as close as the 10-digit-notation allows in one step, *but without reaching 1*. If you add another 9 and arrive at “0.999”, you have again stepped as close to 1 as you could in one step, but without reaching 1.

Even if you would do this an infinite amount of times, *every step* would have the same rule: “… *without* reaching 1”.

The problem with this argument is that one only fails to reach 1 when there is a finite number of digits. That is not the case with 0.9999…: it has an infinite number of digits, and by that definition it can and does reach 1. The number is not a process.

Last year I wrote about it (albeit in Italian) at

http://xmau.com/mate/art/0-999999a.html and http://xmau.com/mate/art/0-999999b.html . My line of reasoning is more or less like yours :-) (oh yeah, there’s also http://xmau.com/mate/art/0-999999c.html where I wonder about the difference between 1.00 and 1, and ramble about measurement errors!)

Ever heard of this guy Cauchy? He might wanna have a word with you.

i really like your explanations of tricky math concepts. do keep posting more good stuff like this. looking forward to your post on approximating functions :)

also from what i understand, dark matter is different from anti-matter. When matter meets anti-matter they annihilate and release energy. Anti-matter is well understood while dark matter is not. It is simply conjectured to exist to explain the speeding up of the expansion of the universe when it should really be slowing down. So you can make that (infinitesimal) correction into your excellent post :)

I think you meant anti-matter where you said “Dark matter destroys regular mass when they come in contact […]”

The hyperreal case is a little bit more subtle than that.

See, the object 0.999…, as you understand it, doesn’t quite exist there. The hyperreals add infinitesimals to the real line by also adding infinitely large numbers, including infinitely large integers. And since {0, 0.9, 0.99, 0.999, …} is a sequence on the positive integers, it gets a lot more terms when it gets embedded into the hyperreal system; it becomes a hyper-sequence, for lack of a better term. Canonically it corresponds to a hyper-sequence whose length is unbounded even in the hyperreals, and still has 1 as a limit.

Now, we could also look at the hyper-sequence which started the same way, but stopped getting bigger at some hyper-integer w. The difference between 1 and that limit would be 10-w, which is positive in our system. (This is what the arXiv paper you cited is talking about.) There are many sequences which are increasing like 0.999… on the standard integers, then take a constant value x on most of the nonstandard integers, but x could be anything.

So the real problem is that 0.999… isn’t well-defined in the hyperreals—it doesn’t really equal anything. I know of no context where 0.999… has a clear resolution and it isn’t 1.

(And for the record, we aren’t rigorous because we like to be. We’re rigorous because the subject demands rigor. Intuition fails a lot.)

sorry, 10-w should be 10^(-w)

some bla guy — I think you’re right about why 0.999… = 1 is a counter-intuitive fact, but there’s an easy counter. Each finite term 0.99…9 is larger than the previous term; so the “infinite term” 0.999… is larger than all the finite terms. So the fact that 1 is larger than 0.99…9 isn’t an obstacle; in fact, it’s a requirement.

Oh, since I’m here anyway:

Aaron — Any proof that assumes N = 1 to prove N = 1 is dead in the water. The usual “proof” goes like this: If (1) N = 0.999…, then (2) 10N = 9.999…; subtract (1) from (2) to get (3) 9N = 9, from which N = 1. I say “proof” because first you have to establish that arithmetic with infinite expansions makes sense, and it’s usually easier to do some other proof instead.
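Written out, the subtraction argument looks like the sketch below. It assumes, as noted, that multiplying and subtracting infinite expansions digit by digit is legitimate in the real numbers. The last line is the geometric series form, one common way to get the same result from finite sums without that assumption.

```latex
\begin{align*}
N &= 0.999\ldots \\
10N &= 9.999\ldots \\
10N - N &= 9.999\ldots - 0.999\ldots = 9 \\
9N &= 9 \quad\Longrightarrow\quad N = 1 \\[1ex]
\sum_{k=1}^{n} \frac{9}{10^{k}} &= 1 - 10^{-n} \;\longrightarrow\; 1 \quad (n \to \infty)
\end{align*}
```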

ram — Your broad point is correct, but what you’ve described is “dark energy”. Dark matter is mass that we know must exist, because of its gravitational effect on visible objects near it, but can’t see, because it doesn’t interact with the electromagnetic field. I’m pretty sure it would actually slow the expansion of the universe, but don’t quote me on that.

I always use this quick explanation to the layman:

1/3 (one third) can be represented by 0.333…

If you take each third and add them up (0.333… + 0.333… + 0.333…) they add up to 1.0, not 0.999…

A very simple way to solve this:

Take two numbers 3 and 5; now, to see if they are equal we can try to find a number between them. Well, a number like 4 or 3.75 is between.

So, now let’s take 0.999… and 1.0. Can you find a number that is between an infinitely repeating set of 9’s before it goes to 1? No, there is no number between 0.999… and 1. If you truly figure 0.999… as an infinite string of 9’s then there is nothing before you would have to round up to 1.

However, for practical purposes we have to round to a finite number. A finite string of 0.9999’s is only equal to one because humans can’t work/comprehend an infinite string of 9’s.

You object that, when asked whether .99999…=1, we view .99999… within “our system”, whatever that means. Well, of course we do! How else could we possibly interpret the question or try to answer it?

When someone asks whether .999… equals 1, they are most certainly asking within the context of the real numbers. Switching gears and trying to interpret the question within the hyperreals is as arbitrary and evasive as choosing to instead view it in the p-adics, where another different (but equally valid) answer could be given.

This is silly. This “argument” really needs to be put to rest. Mathematical rigor exists precisely for this reason.

Ah, I knew this would invite the curiosity of students and the ire of pedants! :)

@Aaron: Great question. These types of proofs make assumptions about how addition and subtraction would work with these infinite decimals (does 9 * 0.999… = 8.999…?), but they do work for the regular number system (see http://math.fau.edu/Richman/HTML/999.htm).

@some bla guy: I’d love to have a word with Cauchy — I bet he’d be interested in learning about new number systems that can rigorously approach the same problems differently!

@mau: Neat — I’ll have to see how well Google translate does at math :).

@ram: Whoops, thanks for the correction! Yes, I meant anti-matter :).

@Chad: Great points, thanks for the discussion! I guess it depends on the meaning of 0.999…, which is indeed ambiguous. I think the better phrasing may be “The hyperreal number u_H = 0.999…;…999000… with H-infinitely many 9s, for some infinite hyperinteger H, satisfies a strict inequality u_H < 1” (from Wikipedia).

I think the higher meta-point is figuring out the question behind the question — the layman isn't asking about 0.999… as constructed in the real number system. They want to know what happens when one number gets "infinitely close" to another — can this be represented? 0.999… is the most convenient form of this question (also see 1/infinity — does this equal 0? Yes, if you take the limit approach, no if you take the hyperreal).

@haileris: Does 1/3 = .333.. exactly, or is 0.333… different at the infinitely small level? :)

@Adam: Great point — because our current number system cannot represent the difference between 0.999… and 1 (there's no number in-between), in our current system they are equal. However, other systems allow it, so I take the approach of "it depends".

@Jeff: Thanks for dropping in, but I disagree that it needs to be put to rest. Transform the problem: if it's 1600 and someone asks 1600s Jeff what does sqrt(-1) mean, what do you say? That they are asking this question in the context of the real numbers, and the answer is undefined? How else do you answer it?

The alternative is to explore a new number system (complex numbers, hyperreal numbers) and see if it has interesting properties. You can't take the question at face value, it's really about exploring the nature of infinitely small numbers.

Hailis has the answer and yes, Kalid, 1/3 _does_ equal 0.333… The numerator is really 1.000… and the division continues ad infinitum. To say that 0.999… does not equal 1 is to say that neither 3/3 nor 9/9 equal 1. I would love to hear your explanation as to why the rules for reducing 9/9 are different than those for reducing 8/8 or, for that matter, 1/1.

Additionally, I don’t think there is much assumption involved when considering how addition might work with these particular infinitely repeating decimals. Try adding 1/3 and 1/7. When expressed as a decimal, each has an infinitely repeating sequence; yet we can identify a very specific and uncontroversial answer: 10/21.
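A quick way to check this kind of claim is exact rational arithmetic. The sketch below uses Python's `fractions` module and a small long-division helper (the name `decimal_digits` is hypothetical) to show the repeating expansions next to the exact sum.

```python
from fractions import Fraction

def decimal_digits(x: Fraction, count: int) -> str:
    """First `count` digits after the decimal point of a fraction in [0, 1), by long division."""
    digits = []
    remainder = x.numerator
    for _ in range(count):
        remainder *= 10
        digits.append(str(remainder // x.denominator))
        remainder %= x.denominator
    return "0." + "".join(digits)

third = Fraction(1, 3)
seventh = Fraction(1, 7)
total = third + seventh  # exact arithmetic, no rounding

print(decimal_digits(third, 6), "+", decimal_digits(seventh, 6), "=", decimal_digits(total, 6))
print(total)
```

The sum is computed on the fractions themselves, so the repeating decimals never have to be added digit by digit. That is exactly the step the "proofs by arithmetic" quietly rely on.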

If we were discussing 0.12341234… it might be a different story; that number is not rational. 0.989898… comes close to 1 but never touches, which makes it an interesting candidate for the tolerance and accuracy portions of this discussion. But nobody is proposing that 0.989898… equals 1.

0.9… is indeed a special case, but it is not the number-line equivalent to infinitely-close-but-not-touching (like the way my fingers don’t actually touch my keyboard as I type – there is a tiny gap between the atoms). 0.9… is 9 * 1/9. It’s a concept we can imagine and denote, but it doesn’t really exist as a unique number. It is, in truth, 1.

About the “ire of pedants” … There is no point discussing infinity unless you are being pedantic and rigorous.

Since we’re wondering out loud, I will say that this kind of number theory issue makes me wonder whether complex numbers are more real than real numbers.

@Ogre_Kev: I agree that in the current real number system, .333… = 1/3. But what this means is this:

“The infinite sequence (.3, .33, .333, .3333…) converges to the limit 1/3”, which is another way of saying “We can make an element of (.3, .33, .333…) as close to 1/3 as we wish”.

You might want to check out http://math.fau.edu/Richman/HTML/999.htm:

“Perhaps the situation is that some real numbers can only be approximated, like the square root of 2, whereas others, like 1, can be written exactly, but can also be approximated. So 0.999… is a series that approximates the exact number 1. Of course this dichotomy depends on what we allow for approximations. For some purposes we might allow any rational number, but for our present discussion the terminating decimals—the decimal fractions—are the natural candidates. These can only approximate 1/3, for example, so we don’t have an exact expression for 1/3”.

So, as long as we stay in the real number system, 1/3 is the limit of .333… [which is fine, but we don’t have to stay in the real number system; others can capture the idea of what we mean when we say infinitely close].

As a side comment: if 3/10 is not 1/3, and 33/100 is not 1/3, at what point does another digit make it exactly 1/3? This is a bit like Zeno’s paradoxes, which have not been fully resolved :). The meta-point is that we can make that sequence as close to 1/3 as we need, which in the real number system means they are equal in the limit.

@Igor: I think it’s possible to sketch out ideas intuitively and return with rigor to cement the foundation — Calculus developed this way, did it not?

@Michael: Great question — I think all numbers may be equal abstractions of the mind. The real number system may be “less real” because it’s more limited than others.

“The infinite sequence (.3, .33, .333, .3333…) converges to the limit 1/3”, which is another way of saying “We can make an element of (.3, .33, .333…) as close to 1/3 as we wish”.

Not exactly. It means that most of the elements of {.3, .33, .333, …} are close to 1/3. Here “most” means “all but finitely many”, and “close” means “within any predetermined positive distance”. That’s how you get that limits are uniquely determined. The terms of a sequence can’t all be clustered around a and all be clustered around b.
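In symbols, the usual definition and its application to this sequence can be sketched as follows, where a_n is my shorthand for the term with n threes:

```latex
\[
\lim_{n\to\infty} a_n = L
\iff
\forall \varepsilon > 0 \;\; \exists N \;\; \forall n \ge N : \; |a_n - L| < \varepsilon
\]
\[
a_n = \underbrace{0.33\ldots3}_{n \text{ threes}} = \frac{1 - 10^{-n}}{3},
\qquad
\left| a_n - \tfrac{1}{3} \right| = \frac{10^{-n}}{3} < \varepsilon
\quad \text{whenever } 10^{n} > \frac{1}{3\varepsilon}
\]
```

For any fixed positive distance, only the finitely many terms before that threshold can fall outside it.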

“As a side comment: if 3/10 is not 1/3, and 33/100 is not 1/3, at what point does another digit make it exactly 1/3?”

There isn’t one. The limit of the sequence is not (generally) a term of the sequence. See comment #9 (my response to “some bla guy”).

“This is a bit like Zeno’s paradoxes, which have not been fully resolved.”

Sure they have, in large part by the limit concept. (If they hadn’t been resolved, even Newtonian physics wouldn’t be possible.)

“The meta-point is that we can make that sequence as close to 1/3 as we need, which in the real number system means they are equal in the limit.”

Limits are unique in the hyperreals as well. You might have infinitesimal separations, but you also have infinitesimal resolving power (if that makes sense). Be careful here: a lot of “standard” sequences with limits, such as {.3, .33, .333, …}, don’t have limits in the hyperreals unless you make some canonical extension to a hypersequence; if you do that, the extension will still have the original limit (in this case 1/3).

“The real number system may be “less real” because it’s more limited than others.”

Not so much. See, the construction that Robinson applied to the reals to get the hyperreals can also be applied to the hyperreals. If we call the result the hyperhyperreals, well, we can apply the construction again, to get the (hyper)^3-reals, and so forth. Each is “less limited” than the previous, but none of these can be the “real” system, because each is “more limited” than the next. But really, none of these is more limited than the others, because the same expressible facts are true in all of them.

To me, the “real” system is the simplest system which easily models the phenomena we’re interested in—which in this case is the ordinary real line.

By the way, you might read Fred Richman’s article a bit more carefully. The system he creates is one in which 0.99… and 1 resolve differently, but it’s also one in which negation and multiplication don’t make sense. So, fair enough, such systems exist, but I wouldn’t want to work in any of them.

@Chad: Thanks for the info & discussion! I’m not rigorously versed in the details, so am learning as I go along :). As far as how “Does 0.999… = 1?” is interpreted by most mathematicians, here’s my guess:

* 0.999… means “continue .999 in the obvious way”

* It is not common to define real numbers as a sequence of decimal digits (though not impossible). We prefer to construct a real number as a Cauchy Sequence of rationals (for example).

* 0.9, 0.99, 0.999, … is the obvious Cauchy Sequence representing that infinite decimal expansion

* Now that we have a sequence, I see you are using the equals operator. Like a compiler doing integer to floating point conversion, I’m going to “cast” the sequence into a real number (if possible) by taking the limit of the sequence, and compare that to 1.

So, as long as we’re staying within the real number system, 0.999… interpreted this way means 1 (and .333… = 1/3). But is that the only interpretation? If we interpret 0.999… as possibly referring to a hyperreal number (1 – h) then what conclusions can we draw?

I think there’s a notion of an “infinitely small gap” that we cannot describe with real numbers that leads to interesting approaches.

It’s interesting to me that early physics was developed with the use of “non-rigorous” infinitesimals; clearly there is a concept there (being able to manipulate dy and dx independently, not taking them as an operator) that was not captured in the current real number system. If there’s a number system (the hyperreals) which can explicitly capture that idea (vs. breaking the rules in the current one) I think it’s more useful for that purpose.

So, by “limited” I don’t mean less capable, but not as innately useful/expressive (you probably know, but most programming languages are equally powerful (Turing Complete) but differ vastly in how useful/usable they are). I agree about the hyper^N reals, I had suspected that too :). But I don’t know of situations where we’re trying to solve problems by relying on 2nd-order infinitesimals and having to work around it in the current one — if we were, I’d suggest that system as the most expressive.

I appreciate the clarification on the Richman piece — he does say it’s an open problem. I’m interested in going through http://www.jstor.org/pss/2316619 which expounds on infinitesimals and their representations further (http://en.wikipedia.org/wiki/0.999…#Infinitesimals).

No problem—this whole discussion is helping me clarify a lot of these ideas as well.

Here’s the thing: if you want to do calculus with infinitesimals, first you have to do arithmetic with them. And that leads to problems, if you also try to do arithmetic with infinite decimal expansions. If you want .99… to resolve to 1 – h, with h infinitesimal but nonzero, then does 1.99… resolve to 2 – h or 2 – 2h? Both make sense. (And don’t say that 2 x .99… is 1.99…8. That has an 8 in the “last place”, and there is no last place.)

Interestingly, though, if we let go of decimal expansions and consider arbitrary sequences of numbers, we get awfully close to hyperreals. In one “hyper” construction, the hyperreals are precisely the sequences of reals modulo a certain equivalence relation. Sequences which have equal terms at most indices are considered equal, and statements which are true at most indices are considered true.

For example, {1,2,3,…} represents an infinitely large number (on account of most natural numbers are larger than x for any fixed real number x); call this number w. Its reciprocal sequence {1,1/2,1/3,…} represents the infinitesimal 1/w, and {.1, .01, .001, …} represents the infinitesimal 10^(-w) (which we’ll call h), and {.9, .99, …} represents 1 – h; but {0, .9, .99, …} represents 1 – 10h, so there’s some nasty ambiguity in (.99…). Also {1.9, 1.99, 1.999, …} represents 2 – h, and {1.8, 1.98, 1.998, …} represents 2 – 2h. So we can get enough infinitesimals to do calculus, but we have to go beyond decimal expansions to do it.
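The term-by-term identities above can be checked with a short Python sketch. Two loud assumptions: each sequence is modeled as a function of its index n, and "equal at most indices" is replaced by a finite check over the first 30 indices, which is only a stand-in for the real equivalence relation. All function names are hypothetical.

```python
from fractions import Fraction

def h(n: int) -> Fraction:
    """n-th term of {.1, .01, .001, ...}."""
    return Fraction(1, 10 ** n)

def nines(n: int) -> Fraction:
    """n-th term of {.9, .99, .999, ...}."""
    return 1 - h(n)

def shifted_nines(n: int) -> Fraction:
    """n-th term of {0, .9, .99, ...}: the same digits, started one step late."""
    return Fraction(0) if n == 1 else nines(n - 1)

def same_at_every_checked_index(a, b, upto: int = 30) -> bool:
    """Finite stand-in for 'equal at most indices': compare terms 1..upto."""
    return all(a(n) == b(n) for n in range(1, upto + 1))

# {0, .9, .99, ...} matches 1 - 10h term by term
print(same_at_every_checked_index(shifted_nines, lambda n: 1 - 10 * h(n)))
# {1.9, 1.99, ...} matches 2 - h
print(same_at_every_checked_index(lambda n: 1 + nines(n), lambda n: 2 - h(n)))
# {1.8, 1.98, ...} (twice the nines sequence) matches 2 - 2h
print(same_at_every_checked_index(lambda n: 2 * nines(n), lambda n: 2 - 2 * h(n)))
# but the two "0.99..." candidates disagree with each other
print(same_at_every_checked_index(nines, shifted_nines))
```

The first three checks agree and the last one does not, which is the ambiguity in a nutshell: two sequences that both "look like" 0.99… differ at every index.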

“We prefer to construct a real number as a Cauchy Sequence of rationals (for example).”

Since we’re being technical here anyway: Cauchy sequences of rationals only represent real numbers. We still have to specify when two Cauchy sequences represent the same real number; and {a_n} and {b_n} do this precisely when {a_n – b_n} converges to 0 in the rationals. In particular, {1, 1, 1, …} and {.9, .99, .999, …} represent the same real number (which is 1), because {.1, .01, .001, …} converges to 0.

I’ll stand by my original position, more or less: there’s no unambiguous way to interpret the infinite decimal expansion of a fraction x as “infinitesimally less” than x, and still be able to do arithmetic with those decimal expansions. The Wikipedia article you cite backs me up on this, at the end of its introduction: “[S]ome settings contain numbers that are ‘just shy’ of 1[, but] these are generally unrelated to 0.999…”.

“So, by “limited” I don’t mean less capable, but not as innately useful/expressive (you probably know, but most programming languages are equally powerful (Turing Complete) but differ vastly in how useful/usable they are)”.

That’s exactly what I’m talking about. The statements which are true on the real numbers are exactly those which are true on the hyperreals — if you’re careful about how you interpret those statements. Nonstandard analysis—that is, analysis with the hyperreals—hasn’t really caught on, and I suspect it’s because proper interpretation is just as difficult to deal with as epsilon-delta argument, with no real gain.

It might be useful to write a nonrigorous calculus textbook based on nonstandard analysis; in fact, I think it’s been done.

I am not sure if this is a statement of derision or fact. The idea that the negatives “wrap around” is not that far fetched. The 1 point compactification of the reals is homeomorphic with the circle. In that context it makes perfect sense to think of the negatives as wrapping around at infinity. I allow my students to use the analogy frequently with asymptotes.

I have a few questions. They may sound like objections, but they’re definitely in question form because I am admittedly slightly out of my depth here. These are the main trip-ups that are keeping me from wrapping my mind around what you’re saying:

How does the fact that we’re working all this stuff about 0.999… in base 10 affect the issue? If it’s not equal to 1, then it’s obviously not a rational number, but can it be expressed, or even approximated, in other bases? In this alternate number system you propose, is 0.777… in base 8 equal to 0.999… in base 10?

And I know this has been touched on in the comments already, but I’m still wondering about the (1/3)*3=1 angle. I take it that in this new system, (1/3) wouldn’t be equal to 0.333…, for the same reasons as with 0.999… and 1. So does this mean that (1/3) cannot be calculated?
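On the base question, one thing that can be checked directly in the ordinary real number system is how the finite truncations behave in different bases. The sketch below is only that, a check on truncations; the function name `repeating_top_digit` is hypothetical, and it says nothing about how any alternate system would treat the infinite expansion.

```python
from fractions import Fraction

def repeating_top_digit(base: int, digits: int) -> Fraction:
    """Value of 0.dd...d (`digits` places) in `base`, where d = base - 1."""
    return sum(Fraction(base - 1, base ** k) for k in range(1, digits + 1))

for base in (2, 8, 10):
    value = repeating_top_digit(base, 5)
    # In every base the gap to 1 after n digits is exactly base**-n.
    print(f"base {base}: value={value}  gap={1 - value}")
```

In each base the gap after n digits is exactly one over the base to the power n, so under the limit approach 0.777… in base 8 and 0.999… in base 10 both close in on the same number, 1.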