Javascript is becoming increasingly popular on websites, from loading dynamic data via AJAX to adding special effects to your page.

Unfortunately, these features come at a price: you must often rely on heavy Javascript libraries that can add dozens or even hundreds of kilobytes to your page.

Users hate waiting, so here are a few techniques you can use to trim down your sites.

(Check out part 2 for downloadable examples.)

Find The Flab

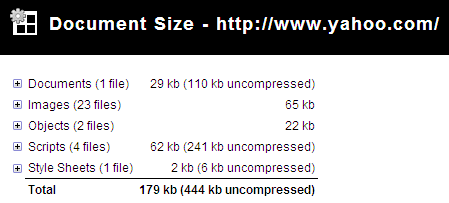

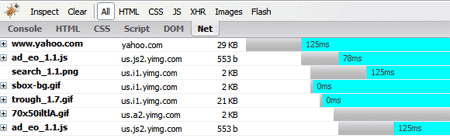

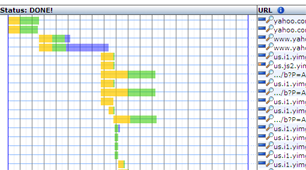

As with any optimization, it helps to measure first and figure out which parts take the longest. You might find that your images and HTML outweigh your scripts. Here are a few ways to investigate:

1. The Firefox web-developer toolbar lets you see a breakdown of file sizes for a page (Right Click > Web Developer > Information > View Document Size). Look at the breakdown and see what is eating the majority of your bandwidth, and which files:

2. The Firebug Plugin also shows a breakdown of files – just go to the “Net” tab. You can also filter by file type:

3. OctaGate SiteTimer gives a clean, online chart of how long each file takes to download:

Disgusted by the bloat? Decided your javascript needs to go? Let’s do it.

Compress Your Javascript

First, you can try to make the javascript file itself smaller. There are lots of utilities to “crunch” your files by removing whitespace and comments.

You can do this, but these tools can be finicky and may make unwanted changes if your code isn’t formatted properly. Here’s what you can do:

1. Run JSLint (online or downloadable version) to analyze your code and make sure it is well-formatted.

2. Use YUI Compressor to compress your javascript from the command line. There are some online packers, but the YUI Compressor (based on Rhino) actually parses your source code, so it is unlikely to change its behavior as it compresses, and it is scriptable.

Install the YUI Compressor (it requires Java), then run it from the command-line (x.y.z is the version you downloaded):

java -jar yuicompressor-x.y.z.jar myfile.js -o myfile-min.js

This compresses myfile.js and writes the result to myfile-min.js. The compressor removes whitespace and comments, and shortens variable names where appropriate.

Using Rhino, I pack the original javascript and deploy the packed version to my website.
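To make the effect concrete, here is a hand-written illustration (not actual compressor output) of what this kind of tool does: comments and whitespace disappear and local names get shorter, while the behavior stays the same. The function names `totalPrice` and `totalPriceMin` are made up for this example.

```javascript
// Original, readable version
function totalPrice(items, taxRate) {
    // Add up the price of every item
    var subtotal = 0;
    for (var index = 0; index < items.length; index++) {
        subtotal += items[index].price;
    }
    // Apply tax and return
    return subtotal * (1 + taxRate);
}

// Hand-minified equivalent: same logic, fewer bytes.
// Only local names (items, taxRate, subtotal, index) are shortened;
// the property name "price" must stay, because outside code depends on it.
function totalPriceMin(a,b){var c=0;for(var d=0;d<a.length;d++){c+=a[d].price}return c*(1+b)}
```

The second version is also a good preview of why debugging packed code is painful: nothing in it tells you what `a`, `b`, `c` or `d` mean.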

Debugging Compressed Javascript

Debugging compressed Javascript can be really difficult because the variables are renamed. I suggest creating a “debug” version of your page that references the original files. Once you test it and get the page working, pack it, test the packed version, and then deploy.

If you have a unit testing framework like jsunit, it shouldn’t be hard to test the packed version.

Eliminating Tedium

Because typing these commands over and over can be tedious, you’ll probably want to create a script to run the packing commands. This .bat file will compress every .js file and create .js.packed:

compress_js.bat:

    for /F %%F in ('dir /b *.js') do java -jar custom_rhino.jar -c %%F > %%F.packed 2>&1

Of course, you can use a better language like perl or bash to make this suit your needs.

Optimize Javascript Placement

Place your javascript at the end of your HTML file if possible. Notice how Google Analytics and other stat-tracking scripts ask to be placed right before the closing </body> tag.

This allows the majority of page content (like images, tables, text) to be loaded and rendered first. The user sees content loading, so the page looks responsive. Only then do the heavy javascript files begin loading.

I used to have all my javascript crammed into the <head> section, but this was unnecessary. Only core files that are absolutely needed in the beginning of the page load should be there. The rest, like cool menu effects, transitions, etc. can be loaded later. You want the page to appear responsive (i.e., something is loading) up front.

Load Javascript On-Demand

A common AJAX pattern is to load javascript dynamically, only when the user runs a feature that requires your script. You can load an arbitrary javascript file from any domain using the following import function:

    // load a script by appending a <script> element to <head>
    function $import(src){
        var scriptElem = document.createElement('script');
        scriptElem.setAttribute('src', src);
        scriptElem.setAttribute('type', 'text/javascript');
        document.getElementsByTagName('head')[0].appendChild(scriptElem);
    }

    // import with a timestamp query parameter to avoid caching
    function $importNoCache(src){
        var ms = new Date().getTime().toString();
        var seed = "?" + ms;
        $import(src + seed);
    }

The function $import('http://example.com/myfile.js') will add a script element to the head of your document, just like including the file directly. The $importNoCache version adds a timestamp to the request to force your browser to get a new copy.
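One caveat with `$importNoCache`: if `src` already has a query string, appending `"?" + ms` produces a URL with two question marks. A possible variation, sketched below, picks `?` or `&` as needed. The helper name `addCacheBuster` and its optional `stamp` parameter are assumptions for illustration, not part of the original code.

```javascript
// Append a cache-busting value to a URL, using "&" when the URL
// already has a query string and "?" otherwise.
// `stamp` is optional; it defaults to the current time in milliseconds.
function addCacheBuster(src, stamp) {
    var value = (stamp === undefined) ? new Date().getTime() : stamp;
    var separator = (src.indexOf('?') === -1) ? '?' : '&';
    return src + separator + value;
}
```

You would then call `$import(addCacheBuster('http://example.com/myfile.js'))` in place of `$importNoCache`.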

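To test whether a file has fully loaded, check for something it defines. Use typeof for the check, because referring directly to a name that was never declared throws an error:

    if (typeof myfunction !== 'undefined'){
        // loaded
    }
    else{ // not loaded yet
        $import('http://www.example.com/myfile.js');
    }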

There is an AJAX version as well but I prefer this one because it is simpler and works for files in any domain.
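One thing the check above does not cover is a feature being triggered twice before the script arrives, which would add the same script tag twice. A minimal sketch of one way to guard against that is below. The names `createLoader` and `load` are hypothetical, and the document object is passed in as a parameter (an assumption made here so the function can also be exercised outside a browser).

```javascript
// Returns a load(src) function that adds each script to <head> at most once.
// In a browser, call createLoader(document).
function createLoader(doc) {
    var requested = {};   // src -> true once a <script> tag has been added

    return function load(src) {
        if (Object.prototype.hasOwnProperty.call(requested, src)) {
            return false;             // already requested; nothing to do
        }
        requested[src] = true;
        var scriptElem = doc.createElement('script');
        scriptElem.setAttribute('src', src);
        scriptElem.setAttribute('type', 'text/javascript');
        doc.getElementsByTagName('head')[0].appendChild(scriptElem);
        return true;                  // a new <script> tag was added
    };
}
```

With `var load = createLoader(document);`, calling `load('http://www.example.com/myfile.js')` from several event handlers results in a single request.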

Delay Your Javascript

Rather than loading your javascript on-demand (which can cause a noticeable gap), load your script in the background, after a delay. Use something like

    var delay = 5; // seconds
    setTimeout(function(){ loadExtraFiles(); }, delay * 1000);

This will call loadExtraFiles() after 5 seconds, which should load the files you need (using $import). You can even have a function at the end of these imported files that does whatever initialization is needed (or calls an existing function to do the initialization).

The benefit of this is that you still get a fast initial page load, and users don’t have a pause when they want to use advanced features.
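As a sketch of what `loadExtraFiles` might look like: the version below imports a list of files only once, so it is safe to call it both from the timer and directly when a user reaches for an advanced feature before the delay is up. `makeDelayedLoader` is a made-up name, and the import function is passed in as a parameter (in the page it would be `$import`).

```javascript
// Build a loadExtraFiles() function that imports every file in `files`
// exactly once, no matter how many times it is called.
function makeDelayedLoader(files, importFn) {
    var started = false;
    return function loadExtraFiles() {
        if (started) {
            return;                   // imports were already kicked off
        }
        started = true;
        for (var i = 0; i < files.length; i++) {
            importFn(files[i]);
        }
    };
}
```

In the page this could be wired up as `var loadExtraFiles = makeDelayedLoader(['charts.js'], $import);` followed by the `setTimeout` call, where `charts.js` is a placeholder file name.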

In the case of InstaCalc, there are heavy charting libraries that aren’t used that often. I’m currently testing a method to delay chart loading by a few seconds while the core functionality remains available from the beginning.

You may need to refactor your code to deal with delayed loading of components. Some ideas:

- Use setTimeout to poll the loading status periodically (check for the existence of functions/variables defined in the included script)

- Call a function at the end of your included script to tell the main program it has been loaded
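The second idea can be sketched as a tiny registry: the main program registers a callback per script, and each included script ends with a call announcing that it has loaded. The names `onScriptReady` and `scriptReady` are made up for this sketch.

```javascript
var readyScripts = Object.create(null);     // name -> true once that script has announced itself
var readyCallbacks = Object.create(null);   // name -> list of functions waiting on that script

// Main program: run `callback` once the named script has loaded.
// If it has already loaded, the callback runs immediately.
function onScriptReady(name, callback) {
    if (readyScripts[name] === true) {
        callback();
        return;
    }
    if (!readyCallbacks[name]) {
        readyCallbacks[name] = [];
    }
    readyCallbacks[name].push(callback);
}

// Included script: call this on its last line, e.g. scriptReady('charts');
function scriptReady(name) {
    readyScripts[name] = true;
    var waiting = readyCallbacks[name] || [];
    readyCallbacks[name] = [];
    for (var i = 0; i < waiting.length; i++) {
        waiting[i]();
    }
}
```

Compared with polling, this needs no timer, but it only works for scripts you control, since the included file has to make the `scriptReady` call itself.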

Cache Your Files

Another approach is to explicitly set the browser’s cache expiration. In order to do this, you’ll need access to PHP or Apache’s .htaccess so you can send back certain cache headers (read more on caching).

Rename myfile.js to myfile.js.php and add the following lines to the top:

    <?php
    // serve this file as javascript and let browsers cache it for 3 days
    header("Content-type: text/javascript; charset=UTF-8");
    header("Cache-Control: must-revalidate");
    $offset = 60 * 60 * 24 * 3; // 3 days, in seconds
    $ExpStr = "Expires: " . gmdate("D, d M Y H:i:s", time() + $offset) . " GMT";
    header($ExpStr);
    ?>

In this case, the cache will expire in (60 * 60 * 24 * 3) seconds or 3 days. Be careful with using this for your own files, especially if they are under development. I’d suggest caching library files that you won’t change often.
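To check the arithmetic behind the Expires header, the same calculation can be written in JavaScript. The PHP above is what actually runs on the server; `expiresHeader` is a made-up helper for illustration. In current engines, `Date.prototype.toUTCString()` produces the same day, date and GMT layout that the `gmdate` call builds.

```javascript
// Build an Expires header `offsetSeconds` after `nowMs`
// (nowMs is a timestamp in milliseconds, as returned by Date.now()).
function expiresHeader(nowMs, offsetSeconds) {
    var expiry = new Date(nowMs + offsetSeconds * 1000);
    return 'Expires: ' + expiry.toUTCString();
}

var threeDays = 60 * 60 * 24 * 3;   // 259200 seconds
console.log(expiresHeader(Date.now(), threeDays));
```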

If you accidentally cache something for too long, you can use the $importNoCache trick to add a timestamp like “myfile.js?123456” to your request (the server ignores the query string). Because the URL is different, the browser will request a new version.

Setting the browser cache doesn’t speed up the initial download, but can help if your site references the same files on multiple pages, or for repeat visitors.

Combine Your Files

A great method I initially forgot is merging several javascript files into one. Your browser can only have so many connections to a website open at a time — given the overhead to set up each connection, it makes sense to combine several small scripts into a larger one.

But you don’t have to combine files manually! Use a script to merge the files — check out part 2 for an example script to do this. Giant files are difficult to edit – it’s nice to break your library into smaller components that can be combined later, just like you break up a C program into smaller modules.
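Part 2 has the full script; purely as an illustration of the idea, a merge step can be as small as the function below. `combineScripts` and the `{name, code}` shape are assumptions for this sketch. Each piece gets a comment header for readability and a trailing semicolon, so a file that omits its final semicolon cannot run into the next one.

```javascript
// Merge several script sources into one string.
// `files` is an array of { name: 'menu.js', code: '...source text...' }.
function combineScripts(files) {
    var parts = [];
    for (var i = 0; i < files.length; i++) {
        parts.push('/* ' + files[i].name + ' */');
        parts.push(files[i].code);
        parts.push(';');   // guard against a missing final semicolon
    }
    return parts.join('\n');
}
```

Reading the files from disk and writing the combined result is left to whatever scripting environment you use for your build.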

Should I Gzip It?

You probably should. I originally said no, because some older browsers have problems with compressed content.

But the web is moving forward. Major sites like Google and Yahoo use it, and the problems in the older browsers aren’t widespread.

The benefits of compression, often a 75% or more reduction in file size, are too good to ignore: optimize your site with HTTP compression.
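If you want a rough feel for the savings before touching server settings, you can gzip some text locally and compare sizes. The sketch below assumes Node.js and its built-in `zlib` module; `gzipSavings` is a made-up helper, and the sample text is a stand-in for a real script. Real files are less repetitive than this sample, so expect smaller savings than it reports.

```javascript
var zlib = require('zlib');

// Return the fraction of bytes saved by gzip for the given text (0 to 1).
function gzipSavings(text) {
    var original = Buffer.from(text, 'utf8');
    var compressed = zlib.gzipSync(original);
    return 1 - compressed.length / original.length;
}

// Artificially repetitive input: 200 copies of one line of code.
var sample = new Array(201).join('function show(id){ return document.getElementById(id); }\n');
console.log(Math.round(gzipSavings(sample) * 100) + '% saved');
```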

All done? Keep learning.

Once you’ve applied these techniques, recheck your page size with the tools above to see the before-and-after difference.

I’m not an expert on these methods — I’m learning as I go. Here are some additional references to dive in deeper:

- Ajax patterns: Performance Optimization

- Think vitamin: Serving Javascript Fast

- Detailed post on page load time

- Detailed Caching Tutorial and online tool to check your cacheability

Keep your scripts lean, and read part 2 for some working examples.

92 Comments on "Speed Up Your Javascript Load Time"

geez man… :)

instead of plugins [web-developer toolbar]&[OctaGate SiteTimer] you can use an another great fx-plugin. this is firebug!

Can you be more specific about the problematic part? Because the great advantage of gzipping javascript is that the code is still readable.

I haven’t had any problems with it so far, but maybe I’m overlooking something.

@magic.ant: Thanks, I forgot about mentioning firebug. It’s great for debugging, but does it break down page sizes as well? (Update: Firebug does show page sizes on the Net tab, not sure how I missed that one! I’ve updated the article.)

@Blaise: The ThinkVitamin article goes into more detail — apparently some versions of Netscape and Internet Explorer (4-6) have issues correctly decompressing gzipped content:

“When loading gzipped JavaScript, Internet Explorer will sometimes incorrectly decompress the resource, or halt compression halfway through, presenting half a file to the client. If you rely on your JavaScript working, you need to avoid sending gzipped content to Internet Explorer.”

There may be workarounds by detecting the browser and returning different code, but I don’t think it’s worth the risk.

However, if you do get it working in all browsers, I’d love to hear about it!

Nice article. You can also mention about merging all javascript files into one – this would reduce the no. of HTTP calls (even if the individual files are cached). And if you are pulling JS files from another host, it also makes sense to move those files to your domain, as this would reduce the server lookup time.

Thanks Anand, I had forgotten to mention that. I just put up Part 2 which has a script to combine your files into a javascript library.

HTTP already allows to gzip the payload, so there is really no need to think about gzipping.

i wrote n uploaded a javascript in my website

that script loads as the page loads

but i want to enter some 30 seconds delay in javascript to load

can i do it

plz help me

Hi Raheel, check out the example in part 2:

/articles/optimized-javascript-example/

There’s a script that loads after 5 seconds — you can change the delay to 30 instead (30 * 1000).

Kalid, I don’t agree with your comment on not using gzip. Gzip is required to be supported by HTTP 1.1 protocol, which includes most modern browsers. The bug in IE 6 that is referenced by the ThinkVitamin article affects not just gzip content, but other content as well. People are far better off using gzip than not.

Also, regarding your comments on caching you need to be careful about what HTTP 1.1 caching commands you use because users could be going through proxies that are HTTP 1.0 resulting in odd/unexpected behavior.

Hi Trevin, thanks for the comments.

1) Regarding gzip compression, I agree that it is extremely useful (in fact, I’m researching the best way to turn it on for InstaCalc).

One problem with IE6 is that it says it accepts gzip’d content but may have problems decoding it:

http://www.google.com/search?q=ie6%20gzip%20problem

http://support.microsoft.com/default.aspx?scid=kb;en-us;Q312496

As a result, webmasters serving gzip’d content have to resort to hacks like detecting the browser user-agent and returning regular content to IE6, even if it says it can accept compressed content:

http://httpd.apache.org/docs/2.0/mod/mod_deflate.html

These tricks can be done, but may be tough for a newbie. Of course, we can just take a scorched earth approach and let IE6 choke if it can’t render the page, but this is a tough stance to take given that IE6 still has a large fraction of browser share.

However, I agree with you that output compression is extremely valuable. In my tests it shaves over 2/3 of the bandwidth, so I really, really want to enable it (and find a suitable workaround for IE6).

2) Yes, caching can be tricky as well. As I followed these topics down the rabbit-hole I’m seeing more of the intricacies here.

In general though, it appears to me that an old HTTP 1.0 proxy won’t cache something if a new HTTP 1.1 header is set. You might not get the performance benefit when using an old proxy, but I’m not sure what other impact there would be.

Both of these are probably topics for a follow-up article :)
